The modern internet is the largest public data source in human history, but most of that data is unstructured and locked away in website code. This is the core problem web scraping solves: it’s the automated process of extracting public data from websites and transforming it into a clean, structured format you can actually use.

For a beginner, web scraping is a game-changing skill. It turns the entire web into a personal database, empowering you to gather competitor pricing, monitor market trends, or collect data for a personal project without spending countless hours manually copying and pasting. This guide will walk you through the entire process, from understanding the fundamentals to building a robust scraper that handles real-world challenges.

Why Web Scraping Is an Essential Modern Skill

The internet is overflowing with valuable data, but the real challenge lies in collecting it efficiently. Web scraping provides a strategic advantage by automating the tedious work of data collection, freeing you to focus on analysis and action.

Imagine an e-commerce manager automatically tracking competitor prices to adjust their own in near real-time. Or a marketer monitoring brand sentiment across dozens of social platforms without checking a single post. These aren't just abstract ideas for large corporations; they are practical, achievable goals for anyone willing to learn the basics.

A Skill for Any Field

Web scraping applications are surprisingly broad and valuable across numerous fields:

- Business Intelligence: Gather market trends, monitor competitors, and identify new business opportunities before they become obvious.

- Academic Research: Collect massive public datasets for scientific studies, linguistic analysis, or social science research.

- Personal Projects: Build a custom price tracker for your favorite products or aggregate news from multiple sources into a single, clean feed.

This isn’t a niche skill for developers. The global web scraping market is exploding, projected to nearly double from $1.03 billion in 2025 to $2 billion by 2030. This growth is driven by an insatiable need for real-time data, with over 65% of global enterprises now using data extraction tools. Price and competitive monitoring is the fastest-growing use case, highlighting how critical scraping has become for business agility. You can discover more industry benchmarks to see how it's become a core business strategy.

Ultimately, learning to scrape the web is an investment in your ability to make smarter, faster decisions. It shifts you from being a passive consumer of information to an active user who can pull exactly the data needed, right when it's needed.

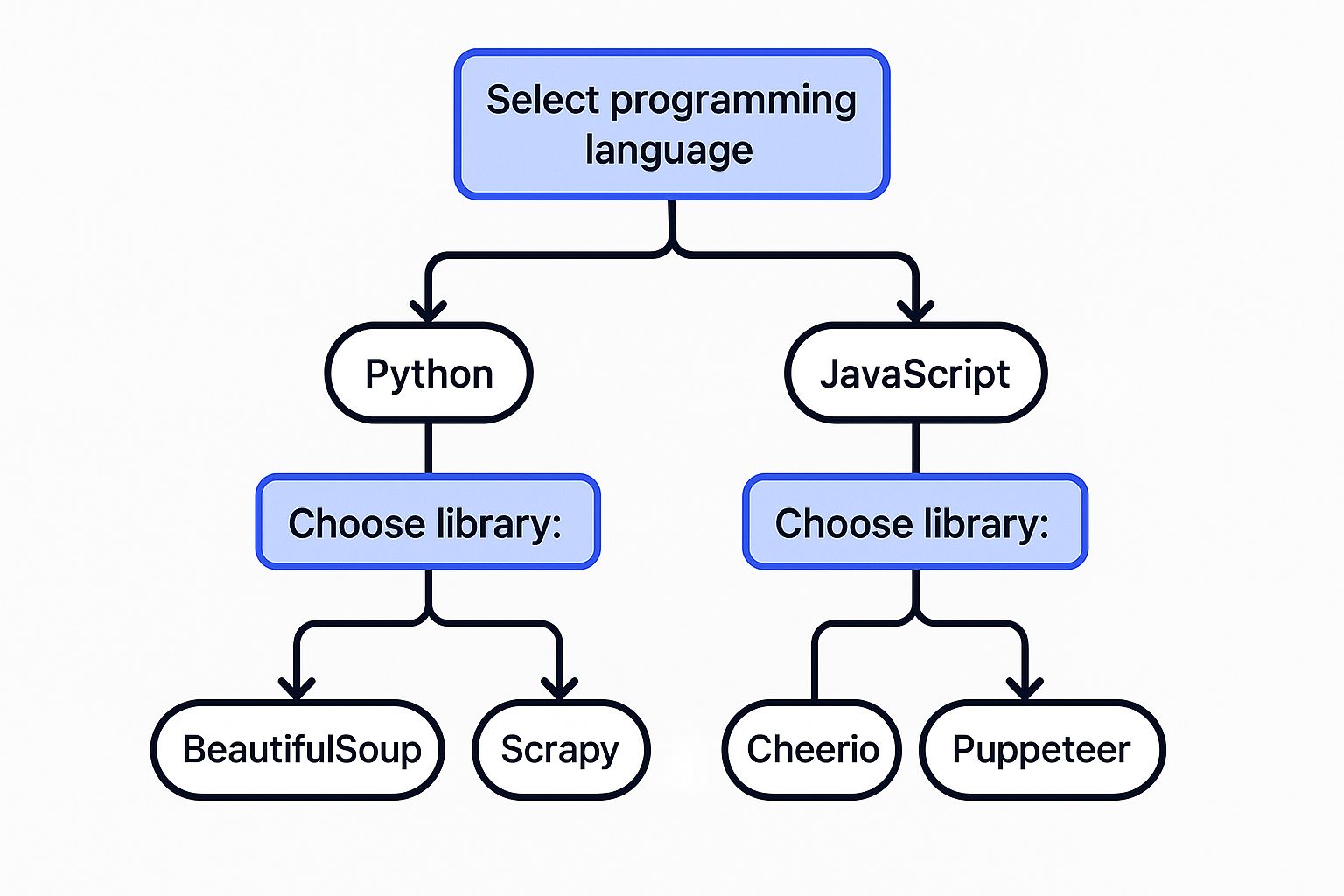

Choosing the Right Approach for Your First Project

Stepping into web scraping can feel like walking into a hardware store for the first time—the sheer number of tools is overwhelming. However, the choice boils down to three main paths: building a scraper yourself with code, using a visual "no-code" tool, or leveraging a web scraping API.

Each approach is valid, but the best one for you depends on your technical comfort, project goals, and scalability needs.

Comparing Web Scraping Methods

To clarify the choice, let's break down the pros and cons of each method. This table provides an at-a-glance comparison.

| Approach | Technical Skill Required | Maintenance Effort | Scalability | Best For |
|---|---|---|---|---|
| DIY (Python Libraries) | High (Coding required) | High (Constant updates needed for site changes) | High (Requires expertise in anti-bot techniques) | Learning to code, custom projects, full control. |
| Visual "No-Code" Tools | Low (Point-and-click interface) | Low | Low to Medium | Simple, one-off tasks and non-technical users. |
| Web Scraping APIs | Medium (Basic API knowledge) | None (Handled by the service provider) | Very High | Beginners wanting reliable data quickly; scalable projects. |

As you can see, there’s a clear trade-off between control, convenience, and scalability.

Path 1: DIY with Python

Building a scraper from scratch with Python gives you ultimate control and is a fantastic way to learn the nuts and bolts of how the web works. A 2025 study found that nearly 70% of developers use Python for scraping tasks, thanks to its clean syntax and powerful libraries like requests (for fetching pages) and BeautifulSoup (for parsing HTML).

However, the DIY route is challenging. You'll spend significant time on setup, coding, and—the most time-consuming part—maintaining the scraper. Websites change their structure frequently, which breaks your code. You are also solely responsible for managing anti-bot measures like proxies and headers. For more context, you can explore these web scraping trends and statistics.

Path 2: Visual Scraping Tools

If coding isn't your current focus, visual scrapers offer an easy on-ramp with a point-and-click interface. They are great for grabbing data from simple sites for a one-time project. The downside? They often struggle with complex, JavaScript-heavy websites and are not built for large-scale or frequent scraping tasks.

Path 3: Web Scraping APIs (Our Recommended Approach)

This brings us to the third path: web scraping APIs. A service like the Olostep API provides the perfect middle ground for beginners. You skip the frustrating backend work—like managing proxies to avoid getting blocked, solving CAPTCHAs, or rendering JavaScript—and get clean data with a simple API call. It’s ideal for beginners who need reliable results without becoming an expert in anti-bot countermeasures.

For a more comprehensive look at the different services available, our guide on the top scraping tools is a great next stop.

Key Takeaway: Your best starting point depends on your goals. To learn programming fundamentals, build it yourself. For fast, reliable data without the maintenance headache, a web scraping API is the most efficient path forward.

Step-by-Step Guide: Building Your First Web Scraper with Python and Olostep API

Theory is great, but let's get our hands dirty and pull data from a live website. We will write a complete Python script that uses the Olostep API to handle the complexities of fetching a web page, allowing us to focus on making the request and processing the response.

Our toolkit is simple: Python and the requests library. It's a classic combination for a reason: it’s powerful, straightforward, and you'll have a working scraper in minutes.

1. Setting Up Your Python Environment

Before writing code, ensure you have the necessary tools. You only need a working Python installation and the requests library.

If requests is not installed, open your terminal or command prompt and run this simple command:

```bash
pip install requests
```

This command tells pip, Python's package manager, to download and install the library. Once it completes, you're ready.

2. Crafting a Robust API Request

Our mission is to send a POST request to the Olostep API endpoint: https://api.olostep.com/v1/scrapes. Unlike a GET request that just fetches a page, a POST request sends a data payload to the server. Our payload will tell Olostep which website to scrape for us.

Let's build a script you can copy, paste, and run. We’ll target a simple e-commerce product page designed for practice and include essential features like error handling and retries.

```python
import json
import os
import time

import requests

# 1. Configuration
API_ENDPOINT = "https://api.olostep.com/v1/scrapes"
# Best practice: store API keys as environment variables
API_KEY = os.environ.get("OLOSTEP_API_KEY")
TARGET_URL = "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html"

if not API_KEY:
    raise ValueError("The OLOSTEP_API_KEY environment variable is not set.")

# 2. Define headers for authentication and content type
headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
    "Accept": "application/json",
}

# 3. Create the payload
payload = {"url": TARGET_URL}

# 4. Implement request logic with error handling and retries
max_retries = 3
retry_delay = 5  # seconds

for attempt in range(max_retries):
    try:
        print(f"--- Attempt {attempt + 1} of {max_retries} ---")
        # Send the POST request with a reasonable timeout
        response = requests.post(
            API_ENDPOINT,
            headers=headers,
            json=payload,
            timeout=30,  # 30-second timeout
        )
        # Raise an exception for bad status codes (4xx or 5xx)
        response.raise_for_status()

        # 5. Process the successful response
        print(f"Success! Received status code: {response.status_code}")

        # Inspect the response headers
        print("\nResponse Headers:")
        for key, value in response.headers.items():
            print(f"  {key}: {value}")

        # The JSON response contains the scraped data
        response_data = response.json()
        html_content = response_data.get("content")  # Assuming content is in the 'content' key

        if html_content:
            print("\n--- First 500 characters of HTML content: ---")
            print(html_content[:500])
        else:
            print("\n--- Response JSON (No 'content' key found): ---")
            print(json.dumps(response_data, indent=2))
        break  # Exit the loop on success

    except requests.exceptions.HTTPError as e:
        print(f"HTTP Error: {e}")
        # Specific handling for 4xx client errors vs 5xx server errors
        if 400 <= e.response.status_code < 500:
            print("Client error - check your request payload and API key. No retry needed.")
            break
        elif 500 <= e.response.status_code < 600:
            print("Server error - retrying...")
            time.sleep(retry_delay * 2 ** attempt)  # Exponential backoff: 5s, 10s, 20s

    except requests.exceptions.Timeout:
        print("Request timed out. Retrying...")
        time.sleep(retry_delay)

    except requests.exceptions.RequestException as e:
        print(f"An unexpected request error occurred: {e}")
        break  # Exit on other critical errors
else:
    # Runs only if the loop finished without hitting a break
    print("All retry attempts failed. Please check your connection and the API status.")
```

Security Tip: Notice the use of `os.environ.get("OLOSTEP_API_KEY")`. Never hardcode API keys or other secrets directly in your code. Storing them as environment variables is a much safer practice that separates credentials from your script.

Deconstructing the Request and Response

Let's break down what just happened.

The Request: Our script constructed a complete HTTP POST request.

- Headers:

```json
{
  "Authorization": "Bearer YOUR_API_KEY",
  "Content-Type": "application/json",
  "Accept": "application/json"
}
```

- Payload (Body):

```json
{
  "url": "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html"
}
```

The Response: Olostep took this request, navigated the complexities of fetching the page—rotating proxies, mimicking a real browser, and executing JavaScript if needed—and returned a JSON object.

- Successful Response (200 OK): A successful response will have a `200 OK` status code and a JSON body containing the raw HTML of the target page. A simplified example of the response payload would look like this:

```json
{
  "content": "<!DOCTYPE html><html><head>...</head><body>...</body></html>",
  "status_code": 200,
  "url": "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html"
}
```

This raw HTML is now yours to command. You can parse it to extract specific details like the book's title, price, or description. It is the foundational step for more advanced tasks and a perfect example of how you can turn websites into LLM-ready data. As you collect different data types, such as emails, a good developer's guide to validate email in Python can be a lifesaver for data cleaning.
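As one possible next step, the sketch below pulls a title and price out of an HTML string using only Python's standard-library `html.parser`. The sample HTML is a hand-written stand-in for the `content` field, and the `h1` and `price_color` selectors are assumptions about the page's markup, not something the API guarantees. `BookParser` and `extract_book` are illustrative names.

```python
from html.parser import HTMLParser

# Hand-written stand-in for the HTML returned in the "content" field (assumption)
SAMPLE_HTML = (
    "<html><body>"
    "<h1>A Light in the Attic</h1>"
    '<p class="price_color">&pound;51.77</p>'
    "</body></html>"
)


class BookParser(HTMLParser):
    """Collects the text of the first <h1> and the first <p class="price_color">."""

    def __init__(self):
        super().__init__()
        self._field = None  # name of the field currently being captured, if any
        self.title = None
        self.price = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h1" and self.title is None:
            self._field = "title"
        elif tag == "p" and "price_color" in classes and self.price is None:
            self._field = "price"

    def handle_data(self, data):
        # Store the first non-empty text found inside a tracked element
        if self._field and data.strip():
            setattr(self, self._field, data.strip())
            self._field = None

    def handle_endtag(self, tag):
        # Stop capturing once any element closes
        self._field = None


def extract_book(html):
    """Return the title and price found in an HTML string."""
    parser = BookParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "price": parser.price}


book = extract_book(SAMPLE_HTML)
print(book["title"], book["price"])
```

A dedicated parsing library such as BeautifulSoup would make the same extraction shorter; the standard-library version is used here only to keep the example dependency-free.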

Navigating Anti-Bot Measures and Common Failures

That first successful scrape is an amazing feeling. But then you run the script against a more complex site and... nothing. An error page, a strange response, or just complete silence. Welcome to the world of anti-bot measures. Every scraper encounters them.

Don't get discouraged. Websites have valid reasons for these roadblocks, like preventing server overload and protecting data. Learning to navigate these hurdles is a core scraping skill. Most defenses try to distinguish between a real person and an automated script.

Troubleshooting Common HTTP Error Codes

The first sign of trouble is often an HTTP status code. You want a 200 OK, but you might get one of these:

- 403 Forbidden: The server understood your request but is refusing to serve it. This often means your IP address, user-agent, or other request headers look suspicious.

- 429 Too Many Requests: You’re sending requests too quickly. This is a clear signal to implement rate limiting and add delays (`time.sleep()`) between your requests.

- 5xx Server Errors (500, 502, 503): These indicate a problem on the server's end. While not always your fault, aggressive scraping can sometimes trigger these. Implementing retries with exponential backoff is the standard solution.

- Timeouts: Your request took too long to complete. This can be due to a slow server, network issues, or complex pages. A good timeout value (e.g., 30 seconds) in your request is crucial.
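The status codes above can be turned into a small decision helper. This is a minimal sketch under stated assumptions: the function names, the set of retryable codes, and the 5-second base delay with a 60-second cap are illustrative choices, not values required by any particular site or API.

```python
# Status codes that are usually worth retrying after a pause (assumed set)
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def classify_status(status_code):
    """Map an HTTP status code to a coarse action for the scraper."""
    if 200 <= status_code < 300:
        return "ok"
    if status_code in RETRYABLE_STATUS:
        return "retry"    # slow down, then try again
    if status_code == 403:
        return "blocked"  # review IP, User-Agent, and other headers
    return "fail"         # other client errors: fix the request instead of retrying


def backoff_delay(attempt, base=5.0, cap=60.0):
    """Exponential backoff: base * 2**attempt seconds, capped at `cap`."""
    return min(cap, base * 2 ** attempt)


for code in (200, 403, 429, 503):
    print(code, classify_status(code))
print([backoff_delay(n) for n in range(5)])
```

Capping the delay keeps a long run of failures from stalling the script for minutes at a time.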

Anti-Scraping Techniques and How to Overcome Them

Websites use a deep arsenal to detect and block scrapers. Here's how to build a more resilient scraper.

1. IP Address Blocking and Proxies

- Problem: Sending many requests from the same IP address is a dead giveaway. The site will quickly block that IP.

- Solution: Use a rotating proxy service. Proxies mask your real IP by routing your request through a different server. A large pool of residential or datacenter proxies ensures each request appears to come from a different user, making you much harder to detect.
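A rough sketch of proxy rotation, assuming you already have a list of proxy URLs from a provider. The addresses below are placeholders and `make_proxy_rotator` is a hypothetical helper name. The returned dictionary has the shape that the `requests` library accepts in its `proxies` argument.

```python
from itertools import cycle

# Placeholder proxy addresses (assumption): substitute the ones your provider gives you
PROXY_POOL = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


def make_proxy_rotator(pool):
    """Return a function that yields the next proxy mapping on every call."""
    proxies = cycle(pool)  # loops over the pool indefinitely

    def next_proxies():
        proxy = next(proxies)
        # Route both plain and TLS traffic through the same proxy
        return {"http": proxy, "https": proxy}

    return next_proxies


next_proxies = make_proxy_rotator(PROXY_POOL)
for _ in range(4):
    print(next_proxies()["https"])
```

With `requests` installed, each call would then look like `requests.get(url, proxies=next_proxies(), timeout=30)`.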

2. User-Agent and Header Management

- Problem: HTTP requests include headers, such as the `User-Agent`, which identifies your browser. A blank or default user-agent (like `python-requests/2.28.1`) is an immediate red flag.

- Solution: Rotate through a list of common, real-world User-Agent strings. Mimic other browser headers like `Accept-Language`, `Accept-Encoding`, and `Referer` to appear more human.
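Header rotation might look like the sketch below. The User-Agent strings are illustrative examples that will age quickly, the `Referer` value is an arbitrary assumption, and `build_headers` is a hypothetical helper name.

```python
import random

# Example desktop User-Agent strings (illustrative; refresh them periodically)
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_headers(referer="https://www.google.com/"):
    """Return browser-like request headers with a randomly chosen User-Agent."""
    return {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",
        "Accept-Encoding": "gzip, deflate",
        "Referer": referer,
    }


print(build_headers()["User-Agent"])
```

The dictionary can be passed as the `headers` argument of a `requests` call, so each request presents a plausible browser identity.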

3. Rate Limiting and Delays

- Problem: Firing requests as fast as your script can run is not how humans browse. This behavior is easily detected and will trigger a `429` error.

- Solution: Scrape politely. Introduce random delays (`time.sleep(random.uniform(1, 5))`) between requests to mimic human browsing speed and reduce server load.
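The random-delay idea can be wrapped in a small loop helper. In this sketch, `fetch_politely` is a hypothetical name and `fetch` stands for whatever function you use to download one URL. The `sleep` parameter exists only so the pause can be swapped out when testing.

```python
import random
import time


def fetch_politely(urls, fetch, min_delay=1.0, max_delay=5.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Pause before every request except the first
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results


# Dry run with a stand-in fetch function and no real waiting
recorded_pauses = []
pages = fetch_politely(
    ["https://example.com/a", "https://example.com/b"],
    fetch=lambda url: f"<html>{url}</html>",
    sleep=recorded_pauses.append,
)
print(len(pages), "pages fetched;", len(recorded_pauses), "pause(s) scheduled")
```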

4. CAPTCHAs

- Problem: These "Completely Automated Public Turing tests to tell Computers and Humans Apart" are designed specifically to block bots.

- Solution: Solving CAPTCHAs programmatically is extremely difficult. The best strategy is to avoid triggering them in the first place by using high-quality residential proxies and human-like headers. If unavoidable, you can use third-party CAPTCHA-solving services, but this adds complexity and cost.

5. JavaScript Rendering and Browser Fingerprinting

- Problem: Many modern sites use JavaScript to load content dynamically. A simple HTTP request won't see this content. Furthermore, sites can analyze details like your screen resolution, fonts, and plugins to create a unique "browser fingerprint."

- Solution: Use a tool that can render JavaScript. This can be a headless browser automation library like Selenium or Playwright, or, more simply, a web scraping API that handles "headless browsing" for you.

Key Takeaway: The goal is not to brute-force your way past these systems but to scrape politely and mimic human behavior. This is precisely where a service like Olostep excels. It automatically manages a massive pool of residential proxies, crafts requests with optimized browser headers, and renders JavaScript, handling these challenges for you. For a deeper dive, read our guide on how to scrape without getting blocked.

Scraping Responsibly: Ethical and Legal Guidelines

Just because you can scrape a website doesn't always mean you should. Understanding the ethical and legal boundaries is as critical as writing your code. This is not formal legal advice but a practical framework for responsible data collection.

1. Check robots.txt First

Before scraping any site, check its robots.txt file (e.g., example.com/robots.txt). This file outlines the rules for bots. While not legally binding, ignoring it is a major ethical breach and a poor practice. If a site explicitly disallows scraping a directory in robots.txt, respect its wishes.
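Python's standard library can perform this check for you. The sketch below parses a sample `robots.txt` held in a string so it runs offline. The rules, the `my-scraper` user-agent name, and the `build_robot_parser` helper are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_robot_parser(robots_txt):
    """Create a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


robots = build_robot_parser(SAMPLE_ROBOTS_TXT)
for url in (
    "https://example.com/catalogue/page-1.html",
    "https://example.com/private/data.html",
):
    allowed = robots.can_fetch("my-scraper", url)
    print(url, "allowed" if allowed else "disallowed")
```

Against a live site, you would instead call `set_url()` with the site's `robots.txt` address followed by `read()` before asking `can_fetch()`.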

2. Don't Overload the Server

Be a good internet citizen. Don't bombard a server with rapid-fire requests. This can slow down or crash the site for human users and is a surefire way to get blocked. Throttle your requests with delays and scrape during off-peak hours if possible.

3. Avoid Scraping Personal Data

This is the brightest red line. Scraping personally identifiable information (PII)—names, emails, phone numbers—is heavily regulated by laws like the GDPR in Europe and the CCPA in California.

Crucial Takeaway: As a beginner, the safest and most ethical path is to avoid scraping personal data entirely. Stick to public, non-sensitive information like product prices, stock levels, or news articles. The legal and financial penalties for violating data privacy laws are severe.

4. Use Public APIs When Available

Many websites offer a public API (Application Programming Interface) as the preferred way to access their data. If a site provides a documented API, always use it instead of scraping. It’s more reliable, stable, and shows respect for the data provider.

Comparing Olostep with Other Scraping APIs

Choosing the right API can make or break your first project. You need something powerful enough for real-world sites but simple enough to avoid getting bogged down in complex configurations. Let’s see how Olostep stacks up against other popular services like ScrapingBee, Bright Data, ScraperAPI, and Scrapfly.

The Landscape of Scraping APIs

Most scraping APIs solve the same core problems: proxy rotation, CAPTCHA handling, and JavaScript rendering. The key differentiators are ease of use, pricing models, performance, and the developer experience.

One service might have a massive proxy network but a confusing credit-based system. Another might have a simple API but a restrictive free plan. To cut through the noise, here's a side-by-side comparison of leading web scraping APIs.

Feature Comparison of Popular Web Scraping APIs

| API Service | Free Tier Offering | JavaScript Rendering | Proxy Management | Beginner Friendliness |
|---|---|---|---|---|
| Olostep | Generous credits (500 free requests) | Yes, simple boolean parameter | Fully automated | Very High |
| ScrapingBee | 1,000 API calls | Yes, credit-based | Fully automated | High |
| Bright Data | Pay-as-you-go model, free trial available | Yes, part of a larger suite of tools | Extensive, highly configurable | Medium |
| ScraperAPI | 5,000 free requests (with limitations) | Yes, premium feature | Fully automated | High |
| Scrapfly | 1,000 free credits | Yes, built-in | Fully automated, with session management | High |

Where Olostep Fits In

Olostep was designed specifically to provide a smooth on-ramp for developers and beginners. Here’s what makes it a great starting point:

- Simplicity and Focus: We built Olostep around a single, powerful API endpoint. There's no confusing maze of products or pricing tiers. You send a URL; you get clean data back. It’s that simple.

- Generous Free Tier: With 500 free requests, you have ample runway to build a proof-of-concept, complete a personal project, or simply learn without any financial commitment.

- Transparent Usage: The API is predictable. Need JavaScript rendering? Just add a simple `render=true` flag. This clarity means no hidden costs or confusing credit calculations.

Our Perspective: While services like Bright Data are incredibly powerful for massive, enterprise-level jobs, their complexity can be a roadblock for beginners. Tools like ScrapingBee, ScraperAPI, and Scrapfly are also excellent choices. We specifically engineered Olostep to flatten the learning curve, making it the perfect launchpad for your first web scraping projects.

Final Checklist and Next Steps

You've learned the theory, built a scraper, and understand the challenges. Here’s a concise checklist for your future projects:

- Define Your Goal: What specific data do you need?

- Check `robots.txt`: Always verify the site's scraping policies first.

- Choose Your Tool: DIY, visual tool, or an API like Olostep?

- Write Your Code: Start with a simple request to a single page.

- Implement Error Handling: Add `try...except` blocks for HTTP errors and timeouts.

- Add Retries: Use a loop with exponential backoff for server-side errors.

- Parse the Data: Use a library like `BeautifulSoup` or `lxml` to extract the information you need from the returned HTML.

- Scrape Politely: Add delays between requests.

- Store Your Data: Save the extracted data in a structured format like CSV or JSON.
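For the final storage step, the standard library covers both formats. The sketch below writes a list of dictionaries as CSV and as JSON. The sample rows and the `write_csv` helper are illustrative, and an in-memory buffer stands in for a real file.

```python
import csv
import io
import json

# Illustrative rows, shaped like the output of a parsing step
rows = [
    {"title": "A Light in the Attic", "price": "51.77"},
    {"title": "Another Book", "price": "12.50"},
]


def write_csv(records, fieldnames, fileobj):
    """Write dictionaries to an open text file object as CSV with a header row."""
    writer = csv.DictWriter(fileobj, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)


buffer = io.StringIO()  # stands in for a file opened with open(path, "w", newline="")
write_csv(rows, ["title", "price"], buffer)
print(buffer.getvalue())

# The same records serialized as JSON text
print(json.dumps(rows, indent=2))
```

When writing to disk, opening the file with `newline=""` lets the `csv` module control line endings, as its documentation recommends.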

Next Steps:

- Learn to Parse HTML: Now that you can fetch raw HTML, learn how to use Python's `BeautifulSoup` library to navigate the HTML tree and extract specific elements.

- Scrape Multiple Pages: Modify your script to loop through a list of URLs or follow "next page" links.

- Handle JavaScript-Heavy Sites: Experiment with the JavaScript rendering feature of your chosen API to tackle dynamic websites.
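Following "next page" links usually comes down to a loop plus URL resolution. In the sketch below, `crawl`, `fetch_page`, and `find_next_href` are hypothetical names: the first fetches one page by whatever means you choose, and the second returns the relative link to the next page or `None`. A tiny in-memory "site" replaces real requests so the example runs offline.

```python
from urllib.parse import urljoin


def crawl(start_url, fetch_page, find_next_href, max_pages=5):
    """Follow next-page links from start_url, returning (url, html) pairs."""
    pages, seen, url = [], set(), start_url
    while url and url not in seen and len(pages) < max_pages:
        seen.add(url)  # guard against link loops
        html = fetch_page(url)
        pages.append((url, html))
        href = find_next_href(html)
        # Resolve relative links such as "page-2.html" against the current URL
        url = urljoin(url, href) if href else None
    return pages


# In-memory stand-in for a paginated site: each "page" is just its next link
FAKE_SITE = {
    "https://example.com/catalogue/page-1.html": "page-2.html",
    "https://example.com/catalogue/page-2.html": "page-3.html",
    "https://example.com/catalogue/page-3.html": None,
}

visited = crawl(
    "https://example.com/catalogue/page-1.html",
    fetch_page=FAKE_SITE.get,
    find_next_href=lambda html: html,
)
print([url for url, _ in visited])
```

The `max_pages` limit and the `seen` set keep an unexpected link structure from turning a small crawl into an endless one.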

Ready to start pulling data without the usual headaches? Olostep provides a powerful API that handles proxies, JavaScript rendering, and anti-bot systems, so you can focus on the data. Get started for free today and turn any website into clean, structured data in minutes.