Every modern business needs data, but it's often locked away on websites. How do you extract it efficiently and reliably? The core problem is that websites are designed for human interaction, not automated scripts. A simple request might work initially, but you'll soon face a wall of anti-bot measures like IP blocks, CAPTCHAs, and dynamic JavaScript content that breaks basic scrapers. This leaves you with a critical decision: build a complex, high-maintenance solution from scratch, or leverage a specialized API that handles these challenges for you.

In this guide, we'll explore both paths. First, we'll look at the DIY approach using Python libraries to understand the fundamental challenges. Then, we'll provide a step-by-step solution using a robust scraping API, complete with practical code examples, error handling, and strategies for bypassing sophisticated anti-bot defenses.

Why Businesses Scrape Data from Websites

In an economy that runs on data, being able to pull clean, structured information from the web is a massive advantage. Web scraping is the engine behind countless data strategies, helping companies gather intelligence for market research, keep a close eye on competitor pricing, and build huge datasets to train their AI models.

But getting that data isn't as simple as just asking for it. This is where companies hit a fork in the road:

- Build a custom script: This path offers total control. Using libraries like Python's Requests and Beautiful Soup, you can fine-tune every part of the scraper. However, this approach makes you solely responsible for overcoming all anti-scraping measures.

- Use a scraping API: This is the "let someone else handle it" approach. You offload all the tricky backend work—like managing proxies, solving CAPTCHAs, and rendering JavaScript—to a service built for this purpose.

While building your own tool sounds great in theory, the reality is that modern websites are designed to shut down automated traffic. A simple script can quickly balloon into a monster project involving IP rotation, user-agent management, and tricky browser fingerprinting. This is where an API really starts to shine.

The Growing Demand for Web Data

The global web scraping market is on a tear, valued at roughly $1.03 billion in 2025 and expected to nearly double to $2.0 billion by 2030. That growth shows just how vital web data has become.

E-commerce alone drives about 48% of all scraping activity as businesses fight to keep track of products and prices. It's no surprise that around 65% of global companies now use data extraction tools to pull public information for their strategic planning. These industry benchmarks really paint a picture of where things are headed.

DIY Scripts vs. Scraping APIs: A Quick Comparison

Deciding between building your own scraper and using an API can be tough. The DIY approach gives you complete control, but it also means you're responsible for every little detail. An API abstracts away the complexity, letting you focus just on the data.

Here’s a quick breakdown to help you see the difference.

| Feature | DIY Scraping (e.g., Requests + Beautiful Soup) | Scraping API (e.g., Olostep) |

|---|---|---|

| Initial Setup | Requires coding knowledge and environment setup. | Simple API key integration. |

| Proxy Management | You have to source, manage, and rotate IPs yourself. | Handled automatically with large residential proxy pools. |

| CAPTCHA Solving | Needs integration with third-party solving services. | Often built-in and handled seamlessly. |

| Maintenance | Constant updates needed to adapt to site changes. | The API provider handles all maintenance. |

| Scalability | Limited by your own infrastructure and budget. | Built for high-volume, concurrent requests. |

| Cost | Low initial cost, but high long-term maintenance. | Subscription-based; predictable and cost-effective at scale. |

Ultimately, while a DIY script is a great learning experience, a managed API is almost always the more practical choice for any serious, long-term project.

Understanding how to scrape data effectively is a crucial first step toward setting up powerful workflow automation strategies that save businesses time and money. The data you collect can be piped directly into automated systems for analysis, reporting, and even training the next wave of AI.

For those of you working with large language models, our guide on how to turn websites into LLM-ready data dives deeper into prepping your scraped content for AI applications.

Setting Up Your First Scraping Request

Let's get our hands dirty and make our first API call. Before writing any code, you need credentials. Head over to the Olostep website and sign up. You’ll get a free account with 500 credits, which is plenty to get started.

Once you’re in, go to your dashboard. Your unique API key will be available there. This key authenticates every request you make, so treat it like a password and keep it safe. The best practice is to store it as an environment variable rather than hardcoding it in your scripts.

With your API key in hand, you're ready to start talking to the API endpoint.

Understanding the API Endpoint and Payload

Every request you send will be a POST request to a single URL: https://api.olostep.com/v1/scrapes. The real magic happens in the JSON payload you send with it. This payload is your instruction manual, telling the API exactly what you need it to do.

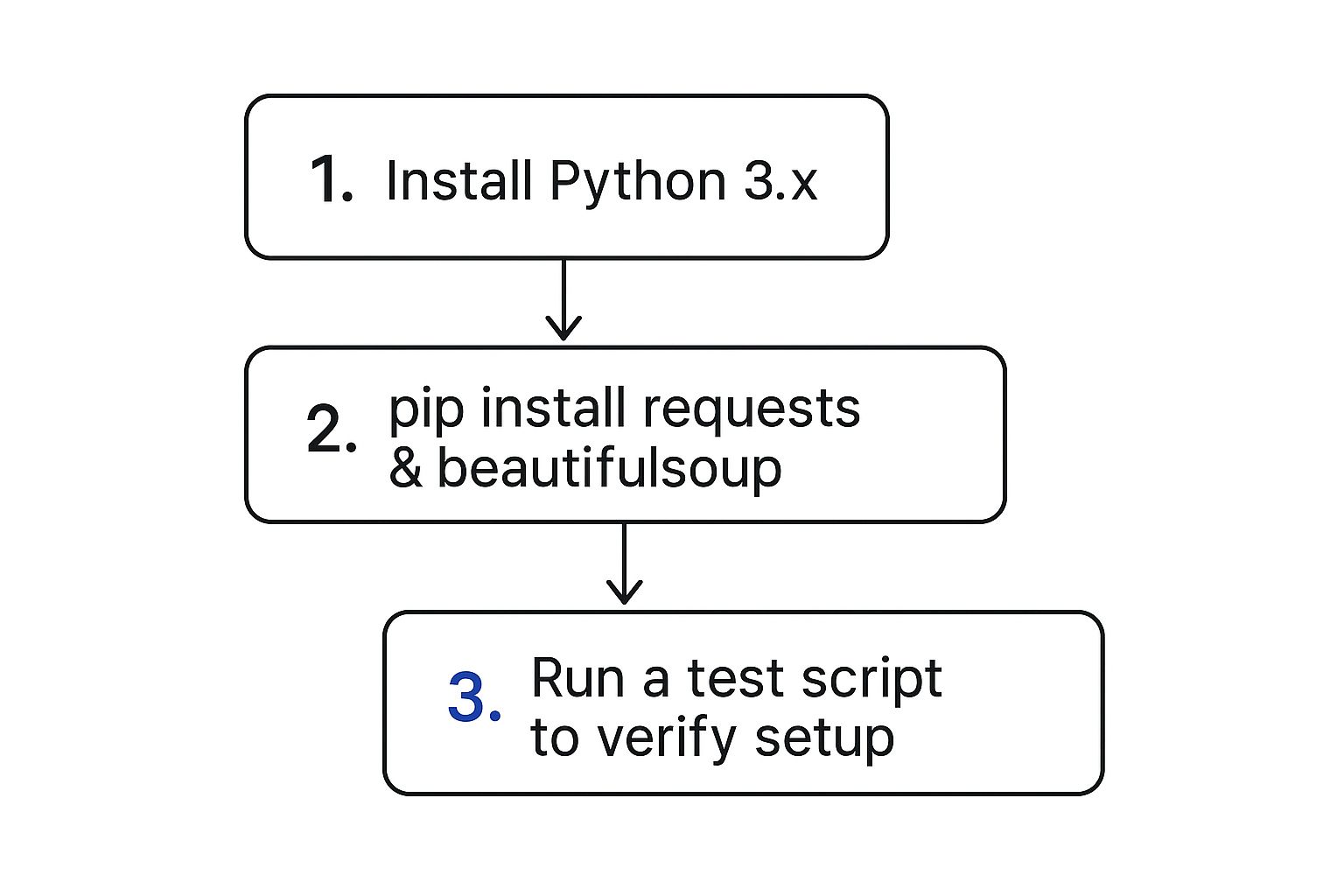

This handy visual breaks down the basic flow, from getting your local environment ready to firing off a test script.

As you can see, having a solid local setup with Python and the requests library is the foundation for working with just about any web scraping API out there.

The payload is where you’ll define the nitty-gritty of your scraping job. While the API has many options, a few core parameters are essential for nearly every request.

url: The direct link to the webpage you want to scrape. This is mandatory.method: The HTTP method to use. For fetching page content, you'll almost always useGET.headers: An object where you can pass HTTP headers. A common one is theUser-Agentto mimic a real browser.

Key Takeaway: It boils down to three things: your API key gets you in the door, the endpoint (

/v1/scrapes) is where you knock, and the JSON payload is what you say. Get comfortable with these three components, and you've mastered the first step to scraping any website.

Scraping a Website with a Python Script

With the setup handled, it's time to write some code. Let's walk through a complete Python script you can copy, paste, and run to see the Olostep API in action. We'll target a real-world e-commerce product page to provide a practical foundation.

We're going to use the requests library to send our POST request. It's the standard for making HTTP requests in Python. If you don't have it installed, open your terminal and run pip install requests.

Building and Sending the Request

The script below is heavily commented to explain each step, from setting up your API key and headers to building the JSON payload and handling the response. It also includes essential error handling—a crucial step many tutorials skip—to prevent your script from crashing on unexpected responses.

Here's the full script:

import os

import requests

import json

import time

# --- 1. Configuration ---

# Best practice: Store your API key as an environment variable.

# On Linux/macOS: export OLOSTEP_API_KEY='your_api_key'

# On Windows: set OLOSTEP_API_KEY='your_api_key'

API_KEY = os.getenv("OLOSTEP_API_KEY")

if not API_KEY:

raise ValueError("OLOSTEP_API_KEY environment variable not set. Please set it before running the script.")

# The single endpoint for all Olostep scraping requests.

SCRAPE_URL = "https://api.olostep.com/v1/scrapes"

# --- 2. Define Request Headers ---

# The Authorization header is required for authentication.

# The Content-Type header tells the API we're sending JSON data.

headers = {

"Authorization": f"Bearer {API_KEY}",

"Content-Type": "application/json"

}

# --- 3. Construct the JSON Payload ---

# This dictionary contains the instructions for the scraping job.

# We're telling Olostep to perform a GET request on the target URL.

payload = {

"url": "https://www.amazon.com/dp/B08P2H5L72", # Example product page

"method": "GET"

}

# --- 4. Send the POST Request with Retries ---

def send_scrape_request(max_retries=3, initial_delay=2):

"""Sends a request to Olostep with an exponential backoff retry mechanism."""

for attempt in range(max_retries):

try:

print(f"Sending scraping request (Attempt {attempt + 1}/{max_retries})...")

# Set a timeout to avoid hanging indefinitely

response = requests.post(SCRAPE_URL, headers=headers, json=payload, timeout=30)

# Check for common retryable server-side errors

if response.status_code in [429, 500, 502, 503, 504]:

print(f"Received status {response.status_code}. Retrying...")

raise requests.exceptions.HTTPError(f"Server error: {response.status_code}", response=response)

# For other non-successful status codes, raise an exception to stop

response.raise_for_status()

# --- 5. Process the Successful Response ---

print("Request successful!")

response_data = response.json()

html_content = response_data.get("content")

if html_content:

# For this example, we'll save the HTML to a file.

# In a real project, you would parse this with BeautifulSoup.

with open("product_page.html", "w", encoding="utf-8") as f:

f.write(html_content)

print("Successfully scraped the page. HTML content saved to product_page.html.")

else:

print("Scraping successful, but no HTML content was returned.")

print("Full API Response:", json.dumps(response_data, indent=2))

return response_data # Success, exit the loop

except requests.exceptions.HTTPError as http_err:

print(f"HTTP error occurred: {http_err}")

# Print the response body for debugging

if http_err.response:

print(f"Response Body: {http_err.response.text}")

except requests.exceptions.RequestException as req_err:

print(f"An error occurred during the request: {req_err}")

except Exception as err:

print(f"An unexpected error occurred: {err}")

# If an error occurred, wait before retrying

if attempt < max_retries - 1:

delay = initial_delay * (2 ** attempt)

print(f"Waiting {delay} seconds before next retry...")

time.sleep(delay)

else:

print("Max retries reached. Aborting.")

return None

# Execute the function

if __name__ == "__main__":

send_scrape_request()Understanding the Response

If your request is successful, the Olostep API will return a JSON object with a 200 OK status code. The most important key in this object is content, which holds the raw HTML of the target page.

Example Success Response Payload:

{

"id": "scr_1a2b3c4d5e6f7g8h",

"status": "completed",

"url": "https://www.amazon.com/dp/B08P2H5L72",

"method": "GET",

"statusCode": 200,

"content": "<!DOCTYPE html><html><head>...</html>",

"createdAt": "2024-10-26T10:00:00.000Z"

}From here, you can feed the content into a library like Beautiful Soup or lxml to parse it and extract the exact data you need, such as product titles, prices, or reviews. For a rundown of parsing libraries, check out our guide on the top scraping tools available today.

Pro Tip: Always check the HTTP status code of the response before parsing. A

200code means success. A4xxcode indicates a client-side error (like a bad API key or invalid payload), while a5xxcode points to a problem on the server's end. Our script handles this withresponse.raise_for_status()and a retry loop for server errors.

Bypassing Common Anti-Bot Defenses

Successful web scraping is a cat-and-mouse game. Websites use an arsenal of anti-bot defenses to block automated traffic. To scrape data reliably, your requests must blend in and appear as if they're coming from a regular user. This means moving beyond a basic GET request and adopting strategies that mimic human behavior.

Rate Limiting, IP Blocks, and User-Agents

The most common reasons a scraper gets blocked are:

- Rate Limiting: Sending too many requests from a single IP address in a short period.

- IP Reputation: Using datacenter IPs that are easily flagged as non-human.

- Browser Fingerprinting: Using a consistent, non-standard

User-Agentheader or lacking other typical browser headers.

This is where proxies become essential. By funneling requests through a pool of residential proxies, each request appears to come from a different device in a different location, making it nearly impossible to distinguish from legitimate traffic. Similarly, rotating through common, up-to-date User-Agent strings helps vary your digital fingerprint.

The good news is that a scraping API like Olostep handles this for you. By setting a few parameters in your API call, you can activate these features without managing the complex infrastructure yourself.

country_code: Specify a country (e.g.,"US") to route your request through a local proxy.premium_proxy: Set this totrueto use high-quality residential IPs, which are far less likely to be blocked.render_js: Set this totrueto have the API execute JavaScript on the page, just like a real browser. This is essential for scraping modern, dynamic websites built with frameworks like React or Vue.

Troubleshooting Common Failures

Even with advanced tools, you'll encounter errors. Here’s how to troubleshoot them:

- CAPTCHAs: If you receive HTML containing a CAPTCHA challenge, it means the site has detected bot-like behavior. Using

premium_proxy: trueandrender_js: trueoften helps, as the API's headless browser can solve many common challenges automatically. - 403 Forbidden: This error means your access is denied. It's often due to a poor IP reputation or a flagged

User-Agent. Rotate yourcountry_codeor ensure you are using premium residential proxies. - 429 Too Many Requests: You've hit a rate limit. The exponential backoff strategy in our Python script is the correct way to handle this. Politely wait and retry.

- 5xx Server Errors: These indicate a temporary problem with the target server. Our retry logic also handles these gracefully.

- Timeouts: The request took too long. This can happen with slow-loading, JavaScript-heavy pages. Ensure you have a reasonable timeout set in your

requestscall and consider ifrender_jsis necessary, as it can increase load times.

Choosing the Right Web Scraping API

With so many scraping APIs available, choosing the right one depends on your project's specific needs, budget, and scale. Let's compare how Olostep stacks up against other popular tools like ScrapingBee, Bright Data, ScraperAPI, and Scrapfly. This fair comparison will help you make an informed decision based on what truly matters: pricing, proxy quality, JavaScript rendering capabilities, and developer support.

Key Factors for Comparison

Your decision will likely hinge on a few critical features:

- Pricing Model: Pay-per-request, bandwidth-based, or a flat monthly subscription? Pay-as-you-go models offer flexibility for smaller projects, while subscriptions provide cost certainty at scale.

- Proxy Network Quality: The type and size of the proxy pool directly impact your success rate. Residential proxies are superior for bypassing blocks but are more expensive than datacenter IPs.

- JavaScript Rendering: Can the API handle modern sites built with React or Vue? For most e-commerce and social media scraping, this is non-negotiable.

- Developer Support: When your scraper fails at 2 AM, how quickly can you get help? Good documentation, responsive support channels, and clear error messages are invaluable.

A great API isn’t just about successful requests; it's about providing the tools and support to debug the failures. Clear error logs and responsive support can be more valuable than a slightly lower price point.

Comparison of Top Web Scraping APIs

This table offers a high-level overview of the competitive landscape. For a deeper dive into our offerings, you can explore the different Olostep scrapers tailored for specific use cases.

| API Provider | Key Features | Pricing Model | Best For |

|---|---|---|---|

| Olostep | AI-powered parsing, premium residential proxies, robust JS rendering, multi-format output (Markdown, PDF). | Credit-based (pay-as-you-go) with monthly subscriptions. | AI startups and developers needing structured, LLM-ready data with minimal post-processing. |

| ScrapingBee | Headless browser support, proxy rotation, and a focus on simplicity and ease of use. | Credit-based API calls with tiered monthly plans. | Small to medium-sized projects and developers who need a straightforward, reliable API. |

| Bright Data | Massive proxy network (residential, mobile), web unlocker tool, and extensive enterprise features. | Pay-per-use (by GB) and platform-specific subscriptions. | Large-scale enterprise operations requiring a vast and diverse proxy infrastructure. |

| ScraperAPI | Simple API with proxy management, CAPTCHA handling, and JS rendering. | Request-based monthly subscriptions. | Businesses needing a simple, scalable solution for standard web scraping tasks. |

| Scrapfly | Anti-scraping protection bypass, JS rendering with browser snapshots, and a focus on scraping at scale. | Subscription-based with credit overages. | Advanced users and businesses needing fine-grained control over browser behavior and anti-bot evasion. |

Scraping Data Ethically and Legally

Extracting public data is powerful, but it's not a free-for-all. How you scrape data from websites is as important as what you scrape. This section is not legal advice but a practical compass to keep your projects ethical and sustainable.

Respecting Website Rules and Rate Limits

- Check

robots.txt: Before scraping, always check the target site'srobots.txtfile (e.g.,https://example.com/robots.txt). This file outlines which parts of the site bots should avoid. While not legally binding, ignoring it is disrespectful and a quick way to get blocked. - Read the Terms of Service (ToS): The ToS often explicitly forbids automated data gathering. Violating these terms can have legal consequences.

- Scrape Politely: Don't overwhelm a website's server. Implement delays between your requests (our retry logic does this automatically on failure) to minimize your impact. A good starting point is one request every few seconds.

Navigating Data Privacy Laws

This is critical. You must be extremely careful about privacy.

- Avoid Personally Identifiable Information (PII): Do not collect names, email addresses, phone numbers, or other personal data unless you have a clear, legal basis for doing so.

- Comply with GDPR and CCPA: Laws like Europe's GDPR and California's CCPA have strict rules for handling personal data. These laws have a global reach and apply to any organization processing data of their residents.

Recent reports show that 86% of organizations are increasing their spending on regulatory compliance, driven by the complexity of these laws. For more details, see this web scraping market report.

Ethical Guideline: Adopt the principle of data minimization. Only scrape the absolute minimum, non-personal information you need. If it's not essential, don't collect it.

Your Web Scraping Checklist and Next Steps

You've learned the theory, seen the code, and understand the challenges. Here’s a concise checklist to guide your next scraping project:

- Define Your Goal: Clearly identify the data points you need to extract.

- Check

robots.txtand Terms of Service: Ensure your scraping is compliant and respectful. - Choose Your Tool: Decide between a DIY script and a scraping API like Olostep based on project complexity.

- Get Your API Key: Sign up for an account and securely store your credentials as environment variables.

- Build Your Request: Construct the JSON payload with the target URL and necessary parameters (

render_js,premium_proxy). - Implement Error Handling: Add robust try-except blocks and a retry mechanism with exponential backoff.

- Parse the Data: Use a library like Beautiful Soup to extract structured data from the returned HTML.

- Store and Use the Data: Save your cleaned data in a structured format (CSV, JSON, database) for analysis or integration.

Next Steps

Now that you have a solid foundation, it's time to apply these concepts to your own projects.

- Start Small: Pick a simple, static website to practice extracting basic information like headlines or prices.

- Tackle a Dynamic Site: Move on to a JavaScript-heavy site, like an e-commerce platform, and use the

render_jsfeature. - Scale Up: Explore scraping multiple pages or products in a loop, always being mindful of polite scraping practices.

Ready to start pulling clean, structured data without getting tangled in the technical weeds? Olostep provides a powerful API that handles all the messy stuff—proxies, CAPTCHAs, and JavaScript rendering—so you can just focus on the data.

Sign up for free and get 500 credits to start scraping today!