Every day, businesses need fresh, accurate data to make critical decisions, from monitoring competitor prices to training AI models. The problem? This valuable information is often trapped within the visual layout of websites, inaccessible for automated analysis. Learning how to scrape a website with Python is the key to unlocking this data, turning the unstructured web into a structured, actionable asset.

At its core, the process seems simple: send an HTTP request to a URL, get back the raw HTML, and then parse it to extract the specific information you need. However, the reality is far more complex. Modern websites are fortified with sophisticated anti-bot systems, dynamic JavaScript rendering, and legal terms that can stop a naive scraper in its tracks.

This guide will walk you through the entire process, from making your first simple request to building a robust, resilient scraper that can navigate the challenges of the modern web. We'll explore multiple approaches, weigh their pros and cons, and provide a complete, step-by-step solution using best practices.

Why Python is the Go-To for Web Scraping

While many languages can scrape websites, Python has become the default choice for most scraping work. This is due to a combination of simple syntax, a large ecosystem of specialized libraries, and an active community.

- Simplicity and Readability: Python's clean syntax makes it easy for beginners to pick up and for teams to maintain complex codebases.

- Rich Library Ecosystem: Libraries like Requests handle the complexities of HTTP communication, Beautiful Soup makes parsing messy HTML a breeze, and frameworks like Scrapy provide a complete toolkit for large-scale projects.

- Data Science Integration: Python is the language of data science. Once you've scraped your data, you can seamlessly feed it into powerful libraries like Pandas, NumPy, and Scikit-learn for analysis, visualization, and machine learning.

Common business applications include:

- E-commerce: Automating price monitoring and tracking competitor inventory.

- Market Research: Aggregating product reviews, social media sentiment, and news articles to spot trends.

- Lead Generation: Extracting contact information from business directories and professional networks.

- AI & Machine Learning: Creating unique datasets for training large language models (LLMs) and predictive algorithms, a practice essential for turning websites into structured, LLM-ready data.

Approach 1: The DIY Scraper with Requests and Beautiful Soup

The classic approach, and the best place to start, is building a scraper from scratch using Python's most popular libraries: requests for fetching web pages and BeautifulSoup for parsing the HTML. This method is perfect for simple, static websites and provides a foundational understanding of the mechanics involved.

Step 1: Fetching the Web Page with Requests

Before we can extract any data, we need to get the website's HTML content. The requests library is the gold standard for this. It simplifies the process of sending HTTP requests and handling responses.

A robust script should always include error handling to manage network issues or bad server responses gracefully.

```python
import time

import requests

# The URL of a static website we want to scrape
url = 'https://books.toscrape.com/'

# Best practice: Define headers to mimic a real browser
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
    'Accept-Language': 'en-US,en;q=0.9',
    # 'br' (Brotli) is left out: requests can only decode it when a Brotli package is installed
    'Accept-Encoding': 'gzip, deflate',
    'Connection': 'keep-alive',
}

html_content = None

# Implement a simple retry mechanism with waits
for attempt in range(3):  # Try up to 3 times
    try:
        # Send an HTTP GET request with a 10-second timeout
        response = requests.get(url, headers=headers, timeout=10)

        # This will raise an HTTPError for bad responses (4xx or 5xx)
        response.raise_for_status()

        # If we get here, the request was successful
        html_content = response.text
        print("Successfully fetched the page.")
        break  # Exit the loop on success

    except requests.exceptions.Timeout:
        print(f"Attempt {attempt + 1}: Request timed out. Retrying in 5 seconds...")
        time.sleep(5)

    except requests.exceptions.HTTPError as e:
        print(f"Attempt {attempt + 1}: HTTP Error: {e.response.status_code}. Retrying in 5 seconds...")
        time.sleep(5)

    except requests.exceptions.RequestException as e:
        # Catch any other network-related problems
        print(f"Attempt {attempt + 1}: An error occurred: {e}. Retrying in 5 seconds...")
        time.sleep(5)

if html_content is None:
    print("Failed to fetch page after multiple attempts.")
```

Wrapping the request in a try...except block with a retry loop makes the scraper resilient to temporary network failures or server-side issues.
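The same retry behavior can also be delegated to the HTTP layer rather than written as a loop. The following is a minimal sketch, assuming a recent requests/urllib3 install (the `allowed_methods` parameter requires urllib3 1.26 or newer); the helper name `build_retrying_session` and the chosen status codes are illustrative choices, not something this guide prescribes.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def build_retrying_session(total_retries=3, backoff_factor=1.0):
    """Return a requests.Session that retries transient failures on its own."""
    retry_policy = Retry(
        total=total_retries,
        backoff_factor=backoff_factor,               # waits grow exponentially between tries
        status_forcelist=[429, 500, 502, 503, 504],  # retry only these response codes
        allowed_methods=["GET", "HEAD"],             # retry idempotent methods only
    )
    adapter = HTTPAdapter(max_retries=retry_policy)
    session = requests.Session()
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session


session = build_retrying_session()

# Usage (performs a real network request, so it is left commented out):
# response = session.get("https://books.toscrape.com/", timeout=10)
# response.raise_for_status()
```

A session also reuses the underlying connection across requests, which helps when scraping many pages from the same host.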

Step 2: Parsing HTML with Beautiful Soup

The html_content we received is a massive string of HTML. We need a way to navigate this structure to find the specific data we want. This is where BeautifulSoup excels. It converts the raw HTML string into a Python object that can be easily searched and manipulated.

First, ensure you have the necessary libraries installed:

```bash
pip install requests beautifulsoup4
```

Now, let's parse the HTML we fetched.

from bs4 import BeautifulSoup

if html_content:

# Create a BeautifulSoup object to parse the HTML content

# 'lxml' is a faster and more robust parser than 'html.parser'

# To install: pip install lxml

soup = BeautifulSoup(html_content, 'lxml')

# Find all 'article' tags that have the class 'product_pod'

book_articles = soup.find_all('article', class_='product_pod')

extracted_data = []

# Loop through each book article found

for article in book_articles:

# The title is in an 'a' tag inside an 'h3' tag

title_tag = article.h3.a

# The actual text is stored in the 'title' attribute of the 'a' tag

title = title_tag['title'] if title_tag else 'N/A'

# Extract the price

price_tag = article.find('p', class_='price_color')

price = price_tag.text if price_tag else 'N/A'

# Extract the rating

rating_tag = article.find('p', class_='star-rating')

# The rating is stored in the class name, e.g., "star-rating Three"

rating = rating_tag['class'][1] if rating_tag and len(rating_tag['class']) > 1 else 'N/A'

extracted_data.append({

"title": title,

"price": price,

"rating": rating

})

# Print the first few results

import json

print(json.dumps(extracted_data[:3], indent=2))

else:

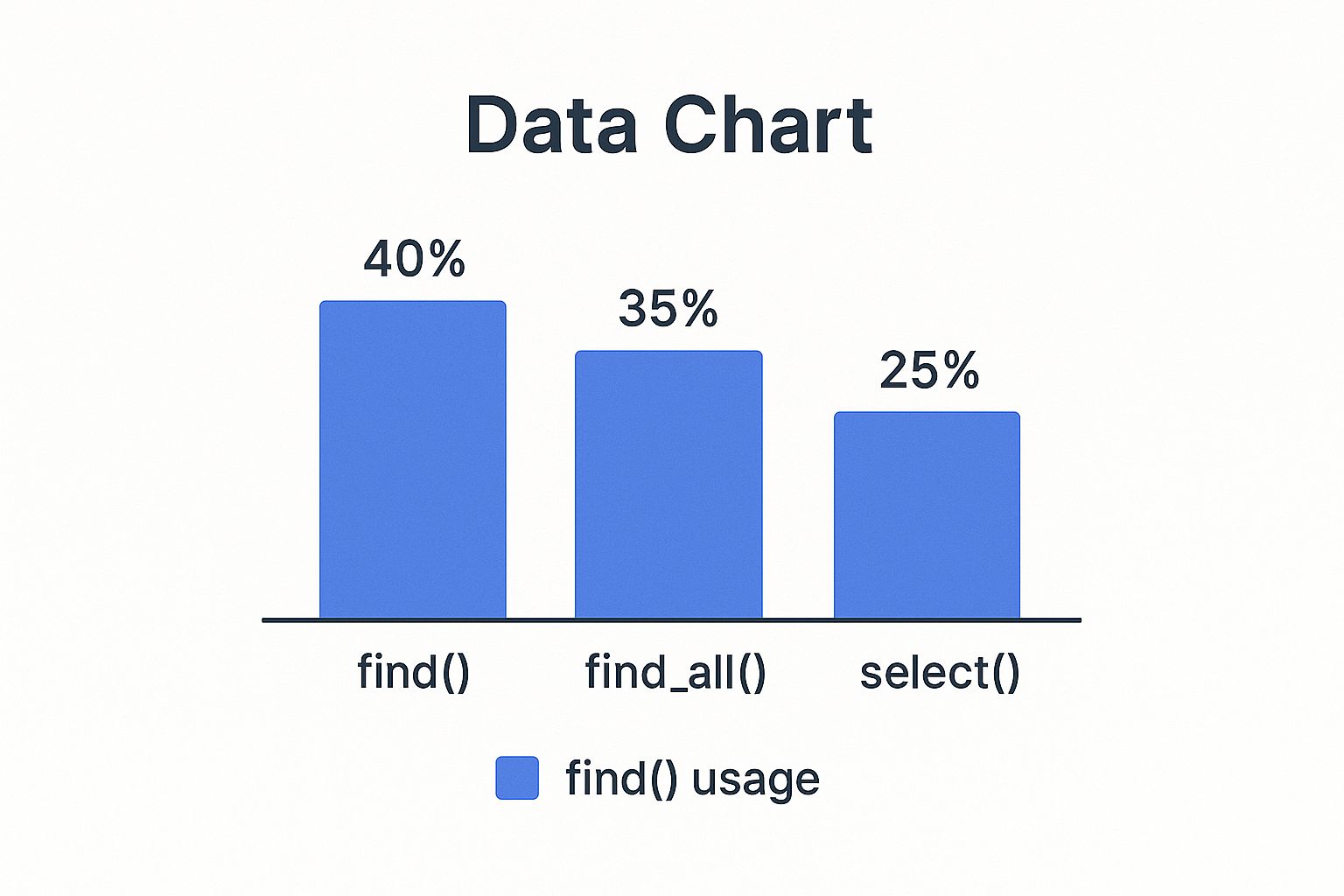

print("No HTML content to parse.")By inspecting the page's HTML (right-click -> Inspect in your browser), we can identify the tags and classes that contain our target data (title, price, rating) and use BeautifulSoup's methods like find_all() and find() to extract it.
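The extracted values are still raw strings: the price carries a currency symbol and the rating is a word taken from a class name. A small cleaning step, sketched below with only the standard library, turns them into numbers that are easier to analyze. The helper names (`parse_price`, `parse_rating`, `clean_record`) and the sample record are illustrative assumptions.

```python
import re

# Maps the rating word found in the class name to a number
RATING_WORDS = {"One": 1, "Two": 2, "Three": 3, "Four": 4, "Five": 5}


def parse_price(price_text):
    """Return the numeric part of a price string such as '£51.77', or None."""
    match = re.search(r"\d+(?:\.\d+)?", price_text)
    return float(match.group()) if match else None


def parse_rating(rating_word):
    """Return the rating as an integer from 1 to 5, or None if unrecognized."""
    return RATING_WORDS.get(rating_word)


def clean_record(record):
    """Convert one scraped record (all strings) into typed values."""
    return {
        "title": record["title"].strip(),
        "price": parse_price(record["price"]),
        "rating": parse_rating(record["rating"]),
    }


# Illustrative record in the same shape as the entries of extracted_data
sample = {"title": "A Light in the Attic", "price": "£51.77", "rating": "Three"}
cleaned = clean_record(sample)
print(cleaned)
```

Records where a field was missing (the 'N/A' placeholders) come out as None, which makes gaps easy to filter later.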

Limitations of the DIY Approach

This method works well for simple sites, but it quickly breaks down when faced with:

- IP Blocks: Making many requests from a single IP address is a huge red flag for anti-bot systems.

- CAPTCHAs: Websites can present challenges that are difficult for automated scripts to solve.

- Dynamic Content: Many modern websites load data using JavaScript after the initial page load. requests only gets the initial HTML and will miss this dynamic content.

- Maintenance Overhead: If the website changes its layout, your scraper will break, requiring constant maintenance.

For any serious or scalable project, these roadblocks necessitate a more robust solution.
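One quick way to tell whether a page falls into the dynamic-content category is to check whether the elements you expect exist in the raw HTML at all. The sketch below uses only the standard library; `ClassCounter` and `count_in_static_html` are hypothetical names, and the two sample pages are made up for illustration.

```python
from html.parser import HTMLParser


class ClassCounter(HTMLParser):
    """Count start tags with a given name that carry a given CSS class."""

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag != self.tag:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self.count += 1


def count_in_static_html(html, tag, css_class):
    """Return how many matching elements appear in the raw HTML string."""
    counter = ClassCounter(tag, css_class)
    counter.feed(html)
    return counter.count


# A server-rendered page contains the target elements directly...
static_page = '<article class="product_pod"><h3><a title="Book">Book</a></h3></article>'
# ...while a JavaScript-driven page often ships only an empty container.
js_page = '<div id="root"></div><script src="app.js"></script>'

static_count = count_in_static_html(static_page, "article", "product_pod")
js_count = count_in_static_html(js_page, "article", "product_pod")
print(static_count, js_count)
```

A count of zero on HTML fetched with requests, for elements that are visible in the browser, is a strong hint that the content is rendered client-side.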

Approach 2: Using a Commercial Web Scraping API

A web scraping API acts as a managed layer between you and the target website. Instead of making direct requests, you send the URL you want to scrape to the API, and it handles all the difficult parts: proxy management, CAPTCHA solving, browser rendering, and header rotation. This makes your scraper more reliable, scalable, and significantly less likely to get blocked.

Step-by-Step Solution with the Olostep API

Let's refactor our previous example to use the Olostep API. This approach outsources the anti-bot battle, allowing us to focus purely on data extraction.

Making the API Request

The process involves sending a POST request to the Olostep API endpoint with your target URL in the JSON payload.

```python
import json
import os
import time

import requests
from bs4 import BeautifulSoup

# Best practice: Store your API key as an environment variable
OLOSTEP_API_KEY = os.environ.get("OLOSTEP_API_KEY")
if not OLOSTEP_API_KEY:
    raise ValueError("Please set the OLOSTEP_API_KEY environment variable.")

# Olostep API endpoint
api_url = "https://api.olostep.com/v1/scrapes"

# JSON payload specifying the target URL and enabling JavaScript rendering
payload = {
    "url": "https://books.toscrape.com/",
    "javascript": True  # Enable browser rendering for dynamic sites
}

# Headers must include your API key for authentication
headers = {
    "x-api-key": OLOSTEP_API_KEY,
    "Content-Type": "application/json"
}

html_content = None

# Implement retry logic with exponential backoff
for attempt in range(4):  # Try up to 4 times
    wait_time = 2 ** attempt  # Exponential backoff: 1, 2, 4, 8 seconds
    try:
        # Send the POST request to the API with a 60-second timeout
        response = requests.post(api_url, headers=headers, json=payload, timeout=60)

        # Check for client-side or server-side errors from the API
        response.raise_for_status()

        response_data = response.json()
        html_content = response_data.get("data")

        if html_content:
            print("Successfully fetched HTML content via Olostep API.")
        else:
            # Handle cases where the API call succeeded but returned no data
            print(f"API call successful, but no HTML content was returned. Response: {response_data}")
        break  # A successful call is not retried, with or without data

    except requests.exceptions.HTTPError as e:
        # Handle specific HTTP error codes
        status_code = e.response.status_code
        print(f"Attempt {attempt + 1}: HTTP Error {status_code} from Olostep API.")

        if status_code == 429 or status_code >= 500:
            # Rate limiting and server errors are usually temporary: back off and retry
            print(f"Retrying in {wait_time} seconds...")
            time.sleep(wait_time)
        else:
            # Other client errors (e.g., 400, 401, 403) will not succeed on retry
            break

    except requests.exceptions.RequestException as e:
        print(f"Attempt {attempt + 1}: A network error occurred: {e}. Retrying in {wait_time} seconds...")
        time.sleep(wait_time)

# --- The parsing logic remains the same ---
if html_content:
    soup = BeautifulSoup(html_content, 'lxml')
    book_articles = soup.find_all('article', class_='product_pod')
    extracted_data = []

    for article in book_articles:
        title_tag = article.h3.a if article.h3 else None
        title = title_tag.get('title', 'N/A') if title_tag else 'N/A'

        price_tag = article.find('p', class_='price_color')
        price = price_tag.text if price_tag else 'N/A'

        rating_tag = article.find('p', class_='star-rating')
        rating = rating_tag['class'][1] if rating_tag and len(rating_tag['class']) > 1 else 'N/A'

        extracted_data.append({"title": title, "price": price, "rating": rating})

    print(json.dumps(extracted_data[:3], indent=2))
else:
    print("Failed to fetch HTML content from Olostep API after multiple attempts.")
```

Understanding the API Request and Response

Request Payload:

```json
{
  "url": "https://books.toscrape.com/",
  "javascript": true,
  "country": "us"
}
```

- url: The target URL to scrape.

- javascript: (Optional) Set to true to render the page in a real browser, essential for dynamic sites.

- country: (Optional) Scrape from a specific geographic location.

Request Headers:

```
x-api-key: YOUR_OLOSTEP_API_KEY
Content-Type: application/json
```

Successful Response Payload:

A successful response is a JSON object containing the scraped data and metadata.

```json
{
  "request_id": "req_a1b2c3d4e5f6",
  "data": "<!DOCTYPE html><html><head>...</head><body>...</body></html>",
  "status_code": 200,
  "url": "https://books.toscrape.com/"
}
```

- data: The full HTML content of the page, which you can now feed into Beautiful Soup.

- status_code: The status code of the request made to the target website.

- request_id: A unique identifier for your API request, useful for debugging.
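Because the API call itself can succeed while the target site returned an error, it is worth checking the fields of the response before parsing. The sketch below assumes the response shape shown above (`data`, `status_code`, `request_id`); confirm the field names against the provider's current documentation. The function name `extract_html` is a hypothetical helper.

```python
def extract_html(response_data):
    """Return the page HTML from a parsed API response, or raise ValueError."""
    request_id = response_data.get("request_id", "unknown")

    # status_code reflects the request made to the target website
    target_status = response_data.get("status_code")
    if target_status != 200:
        raise ValueError(
            f"Target site returned status {target_status} (request_id={request_id})"
        )

    html = response_data.get("data")
    if not html:
        raise ValueError(f"Response contained no HTML (request_id={request_id})")
    return html


# Sample payload copied from the response format described above
sample_response = {
    "request_id": "req_a1b2c3d4e5f6",
    "data": "<!DOCTYPE html><html><head>...</head><body>...</body></html>",
    "status_code": 200,
    "url": "https://books.toscrape.com/",
}

html = extract_html(sample_response)
print(html[:15])

# A blocked target surfaces as an exception that includes the request_id
try:
    extract_html({"request_id": "req_example", "data": "", "status_code": 403})
except ValueError as error:
    blocked_message = str(error)
    print(blocked_message)
```

Including the request_id in the error message makes it easier to reference a failed request when debugging.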

This API-driven approach follows modern API design best practices by providing a clean, predictable interface that abstracts away immense complexity.

Handling Anti-Bot Measures and Common Failures

Even with an API, understanding how websites block scrapers is crucial. Learning how to scrape a website with Python effectively means learning to think like an anti-bot system and proactively address its defenses.

Anti-Bot Guidance

- Rate Limits and Waits: Don't bombard a server with requests. Introduce delays (time.sleep()) between requests to mimic human browsing speed. A scraper hitting hundreds of pages per second is an obvious bot.

- Realistic Headers: Always set the User-Agent header to mimic a common web browser. Also consider including other headers like Accept-Language and Accept-Encoding to appear more legitimate.

- Proxy Rotation: Use a large pool of high-quality residential or datacenter proxies to avoid IP-based blocking. This is one of the biggest benefits of using a scraping API, as it's managed for you.

- Headless Browsers for JavaScript: For sites heavy with client-side rendering, a simple requests.get() won't work. You need a headless browser (like those used by scraping APIs or libraries like Selenium and Playwright) to execute JavaScript and get the final HTML. For a deeper dive, read our guide on how to scrape without getting blocked.
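The rate-limiting advice above can be packaged as a small helper that enforces a minimum gap between requests and adds a little randomness so the timing is not perfectly regular. This is a sketch with assumed defaults; the `Throttle` class is a hypothetical name, and the clock and sleep functions are injectable only so the demonstration runs instantly.

```python
import random
import time


class Throttle:
    """Enforce a minimum, slightly randomized gap between consecutive requests."""

    def __init__(self, min_delay=1.0, jitter=0.5, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self.jitter = jitter
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Block until enough time has passed since the previous call."""
        if self._last_request is not None:
            target_gap = self.min_delay + random.uniform(0, self.jitter)
            remaining = target_gap - (self._clock() - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
        self._last_request = self._clock()


# Demonstration with a fake clock so no real waiting happens
fake_now = [0.0]
naps = []


def fake_sleep(seconds):
    naps.append(seconds)
    fake_now[0] += seconds


throttle = Throttle(min_delay=1.0, jitter=0.0, clock=lambda: fake_now[0], sleep=fake_sleep)
for _ in range(3):
    throttle.wait()  # a real scraper would send its request right after this call
print(naps)
```

In a real scraper, create `Throttle()` with the default clock and call `wait()` before each request.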

Troubleshooting Common Failures

- CAPTCHAs: These are designed specifically to block bots. Solving them requires sophisticated services, which are often integrated into commercial scraping APIs.

- 403 Forbidden / 401 Unauthorized: Your request was denied. This often points to a banned IP, a bad User-Agent, or missing authentication cookies.

- 429 Too Many Requests: You've been rate-limited. The server is explicitly telling you to slow down. The best response is to implement exponential backoff: wait, then retry with a longer delay.

- 5xx Server Errors (e.g., 500, 502, 503): The problem is on the server's end. These are often temporary, making them perfect candidates for a retry mechanism.

- Timeouts: The server took too long to respond. This can be due to network issues or an overloaded server. A good retry strategy is also effective here.
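The retry rules in this list can be condensed into two small functions: one that decides whether a status code is worth retrying, and one that computes how long to wait. This is a sketch under stated assumptions: the names `should_retry` and `backoff_delay` are hypothetical, the randomized delay (often called "full jitter") is one common variant of exponential backoff, and only the numeric form of the Retry-After header is honored.

```python
import random


def should_retry(status_code):
    """Retry rate limiting (429) and server errors (5xx); other 4xx are permanent."""
    return status_code == 429 or 500 <= status_code < 600


def backoff_delay(attempt, base=1.0, cap=60.0, retry_after=None, rng=random.random):
    """Return the seconds to wait before retry number `attempt` (0-based)."""
    if retry_after is not None:
        try:
            # Honor the server's own hint when it is given in seconds
            return min(cap, max(0.0, float(retry_after)))
        except ValueError:
            pass  # Retry-After can also be an HTTP date; fall back to backoff

    ceiling = min(cap, base * (2 ** attempt))
    return ceiling * rng()  # random point between 0 and the exponential ceiling


# With rng pinned to 1.0 the delays show the plain exponential ceilings
ceilings = [backoff_delay(n, rng=lambda: 1.0) for n in range(5)]
print(ceilings)
```

The random factor spreads out retries from many workers so they do not all hit the server again at the same moment.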

Ethical and Legal Considerations

Just because you can scrape a website doesn’t always mean you should.

- Check robots.txt: Always respect the robots.txt file (e.g., https://example.com/robots.txt). It outlines which parts of a site the owner does not want bots to access.

- Read the Terms of Service: Many sites explicitly prohibit data scraping in their ToS. Violating these terms can lead to legal action.

- Avoid Personal Data: Scraping personally identifiable information (PII) is a huge legal and ethical risk, especially with regulations like GDPR and CCPA.

- Don't Overload Servers: Be a good internet citizen. Scrape at a reasonable pace to avoid impacting the website's performance for other users.

- Respect Copyright: Content you scrape may be protected by copyright or other rights held by the website or its contributors. Be mindful of how you use it, especially for commercial purposes.
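The robots.txt check can be automated with Python's standard library. The sketch below parses a made-up robots.txt from a string so it runs without network access; the user agent name "my-scraper" and the sample rules are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt_text):
    """Create a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt_text.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

allowed_public = parser.can_fetch("my-scraper", "https://example.com/catalogue/")
allowed_private = parser.can_fetch("my-scraper", "https://example.com/private/data.html")
delay = parser.crawl_delay("my-scraper")

print(allowed_public, allowed_private, delay)
```

Against a live site, `parser.set_url("https://example.com/robots.txt")` followed by `parser.read()` downloads and parses the file instead. A crawl delay, when present, is a natural value for the pause between requests.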

Comparison of Web Scraping Alternatives

The "build vs. buy" decision is central to any scraping project. Here's how leading solutions compare:

| Feature | DIY (Requests + BS4) | Olostep | ScrapingBee | Bright Data | ScraperAPI |
|---|---|---|---|---|---|
| Ease of Use | Low (Requires coding & infra management) | High (Simple API call) | High | Medium (Complex platform) | High |
| Scalability | Low to Medium (Self-managed) | High | High | Very High | High |
| Anti-Bot Handling | Manual (IP rotation, User-Agents) | Fully Managed | Fully Managed | Fully Managed | Fully Managed |
| JavaScript Rendering | No (Requires Selenium/Playwright) | Yes | Yes | Yes | Yes |
| Maintenance | High (Constant updates required) | None | None | Low | None |
| Cost | Low (Server costs only) | Pay-as-you-go | Subscription | Pay-per-use | Subscription |
| Ideal Use Case | Small projects, learning, static sites | Developers & AI startups needing reliable, structured data | General purpose scraping | Large-scale enterprise data extraction | Web developers, agencies |

Fair Comparison Note: While all these services offer robust features, the best choice depends on your specific needs. Olostep is designed for developers who need a simple, reliable API for structured data extraction without a complex platform. Bright Data offers a vast suite of tools for large enterprises but can have a steeper learning curve. ScrapingBee and ScraperAPI are strong, developer-friendly alternatives with different pricing models. Your choice should align with your project's scale, budget, and technical requirements. For projects that become too large or complex for an in-house team, professional web scraping services offer a fully managed outsourcing option.

Final Checklist and Next Steps

Before launching any scraping project, run through this checklist to ensure you're set up for success.

Pre-Scrape Checklist

- Define Your Goal: What specific data points do you need? Be precise.

- Analyze the Target: Use browser developer tools to inspect the site. Is the data in the static HTML, or is it loaded via JavaScript? Identify the target HTML tags, classes, and IDs.

- Check the Rules: Review robots.txt and the Terms of Service.

- Choose Your Strategy: Is a simple DIY script sufficient, or do you need the power of a scraping API to handle anti-bot measures?

- Plan for Data Storage: How will you store the extracted data? (e.g., CSV, JSON, database).

- Implement Error Handling: Build robust retry logic with exponential backoff for common HTTP errors and timeouts.
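For the storage item on this checklist, CSV is often the simplest starting point. The sketch below writes records shaped like the earlier extracted_data list using the standard library; it writes to an in-memory buffer so it runs without touching the filesystem, and the `write_csv` helper and sample records are illustrative.

```python
import csv
import io


def write_csv(records, file_obj, fieldnames=("title", "price", "rating")):
    """Write a list of dicts to an open text file as CSV with a header row."""
    writer = csv.DictWriter(file_obj, fieldnames=list(fieldnames))
    writer.writeheader()
    writer.writerows(records)


# Illustrative records in the same shape as extracted_data
records = [
    {"title": "A Light in the Attic", "price": "£51.77", "rating": "Three"},
    {"title": "Tipping the Velvet", "price": "£53.74", "rating": "One"},
]

buffer = io.StringIO()
write_csv(records, buffer)
csv_lines = buffer.getvalue().splitlines()
print(csv_lines)

# To write a real file instead (newline="" avoids blank rows on Windows):
# with open("books.csv", "w", newline="", encoding="utf-8") as f:
#     write_csv(records, f)
```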

Next Steps

With the fundamentals covered, you can now explore more advanced topics:

- Large-Scale Scraping: Learn to use task queues (like Celery with Redis) to manage and distribute thousands of scraping jobs concurrently.

- Data Pipelines: Automate the entire process by creating pipelines that scrape data, clean it, and load it into a database (e.g., PostgreSQL, BigQuery) for analysis.

- Deployment: Deploy your scrapers to the cloud (e.g., using Docker on AWS or Google Cloud) to run on a schedule without manual intervention.
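Before reaching for a full task queue, a thread pool from the standard library is often enough to fetch a moderate number of pages concurrently. The sketch below is one possible starting point, not a replacement for the tools named above: `scrape_many` and `fake_fetch` are hypothetical names, the URLs are placeholders, and the fetch function is a stand-in so the example runs without network access.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_many(urls, fetch, max_workers=4):
    """Run fetch(url) for every URL in a thread pool.

    Returns a dict mapping each URL to its result, or to the exception raised,
    so that one failing page does not abort the whole batch.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:
                results[url] = exc
    return results


def fake_fetch(url):
    """Stand-in for a real fetch function; fails on one page to show error handling."""
    if url.endswith("page-3.html"):
        raise RuntimeError("simulated failure")
    return f"<html>{url}</html>"


# Placeholder URLs for illustration
urls = [f"https://example.com/catalogue/page-{n}.html" for n in range(1, 5)]
results = scrape_many(urls, fake_fetch)

failed = [url for url, value in results.items() if isinstance(value, Exception)]
print(len(results), failed)
```

Keep max_workers small and combine it with delays between requests so that concurrency does not turn into the server overload warned about earlier.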

Web scraping is a powerful skill that unlocks a world of data. By starting with a solid foundation and choosing the right tools for the job, you can build reliable and scalable data extraction solutions.
