The Web is the backbone of most businesses, both AI-native applications and more traditional companies. In the next 2-5 years, the primary user of the web for work will switch from humans to AI agents. All businesses will deploy AI systems, and these systems will need to search, extract, and structure data from the Web.

The current workflows, search infrastructure, and developer tools around the Web still assume that a human is the primary operator. That’s about to change.

This shift will encompass all industries and open new markets.

To build a magical product in this category we have to make key decisions at the research, infrastructure, and product layers.

The web's next primary user is an AI agent

In the next few years, the primary user of the web for work will shift from humans to AI agents. The web is the operating system of work and the place where the digital economy lives. We are looking at a trillion-dollar market poised for change.

Use cases include deep research to automate knowledge work, recruiting (finding information on candidates), finance (historical market data and real-time news), CRM enrichment, referencing technical documentation for coding agents, monitoring the Web for changes (market intelligence, competitor analysis, e-commerce), and powering AI applications grounded in real-world data and facts (AI brand visibility, detecting IP infringement, building vertical AI search engines).

Jason Cui and the a16z team describe this shift well in their recent piece on the emerging "search wars" for agents, where new entrants are rebuilding indexing, crawling, and API layers from scratch for machine consumption.

This is going to be the defining technology of our decade, and it will give rise to exciting new possibilities.

Every business deploying AI will need ways to routinely search, extract, and structure knowledge from the living web. AI is allowing us to reimagine the search market for the first time since Google emerged as the clear winner in the previous era. We are at the dawn of a new era. And there are a few significant differences that favour emerging players over the incumbents.

Why is search for AI different from search for humans?

Traditionally, the outcome of search for humans is a series of links. We then click on these links, browse the websites, read the content, and extract the information we need.

A search engine's primary concern is (or at least should be, before we account for ads) crawling, indexing, and ranking websites, then matching keywords when a query is submitted to serve the links most relevant to it. Since most of us have limited time and patience, we want results returned in milliseconds and usually consult only the first few links.

So a search engine for humans has to optimize indexing and retrieval to be fast, accurate and relevant. The search experience ends when these links are returned.

There were several popular search engines before Google (Yahoo! Search, Lycos, AltaVista, Inktomi, etc.), but the PageRank algorithm, which ranks a website's authority by treating the Web as a graph of trust signals, proved at the time to be the most effective technology.

But AIs have significant differences:

- AIs can navigate hundreds or thousands of websites and cross-reference information (deep research)

- AIs don't need just a series of links. They need to navigate websites and extract the most relevant information directly, even when it does not match the exact query keywords

- AI queries can be significantly longer and more complex than human queries

- AIs need to structure this data so it's compatible with businesses' backends and can be used in chained workflows typical of a reasoning agent

So while search for humans only needs to return relevant results when a query is submitted (i.e. search as commonly understood), search for AI is more encompassing and agentic, and needs to operate on three different levels:

Search, extract and structure data
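To make the three levels concrete, here is a toy sketch that chains them over a tiny in-memory corpus. Everything in it is an assumption for illustration: the `PAGES` data, the `Fact` record, and the `search`, `extract`, `structure` and `run` functions are hypothetical names, and simple keyword matching stands in for real retrieval. It is not a description of how any production system, including ours, works.

```python
from dataclasses import dataclass

# Toy in-memory "web": URL -> page text. Purely illustrative data.
PAGES = {
    "https://example.com/a": "Acme Corp was founded in 1999. Acme sells anvils.",
    "https://example.com/b": "Globex was founded in 1989. Globex builds reactors.",
}


@dataclass
class Fact:
    """Hypothetical typed record handed to downstream systems."""
    source: str
    sentence: str


def search(query, pages):
    """Level 1: rank URLs by how many query terms appear in each page."""
    terms = query.lower().split()
    scored = [
        (sum(term in text.lower() for term in terms), url)
        for url, text in pages.items()
    ]
    return [url for score, url in sorted(scored, reverse=True) if score > 0]


def extract(url, pages, query):
    """Level 2: keep only the sentences that mention a query term."""
    terms = query.lower().split()
    sentences = [s.strip() for s in pages[url].split(".") if s.strip()]
    return [s for s in sentences if any(term in s.lower() for term in terms)]


def structure(url, sentences):
    """Level 3: wrap the extracted text in typed records."""
    return [Fact(source=url, sentence=s) for s in sentences]


def run(query, pages=PAGES):
    """Chain the three levels, as an agent would inside a workflow."""
    facts = []
    for url in search(query, pages):
        facts.extend(structure(url, extract(url, pages, query)))
    return facts
```

Calling `run("acme founded")` returns `Fact` records rather than links, which is the point: the output is already shaped for the next step of a chained workflow.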

Since this space is enormous, we need to be disciplined when tackling it.

Search: Depth over breadth. Dedicated, domain-specific indexes

When Arslan was building the early data pipelines at Legora, a legal-tech company now valued at $1.8B, one of the biggest bottlenecks was building the infrastructure to crawl and search relevant information on the Web. They had to manually build specific crawlers for each country, state, and law firm, and then stream that data into siloed indexes for the AI to reference and search. It was a long, costly, and tedious process that required significant engineering lift. All businesses that want to embed AI will have to go through a similar process, or adopt a product that does it on their behalf.

Traditional web indexes are breadth-first: they cover a bit of everything but they don't go deep in any one domain. Agents running inside real businesses need the opposite. A procurement agent at a global manufacturer, a legal research co-pilot, or an e-commerce automation agent needs domain-specific depth with deterministic schemas.

That’s why at Olostep we focus on company-specific, hyper-specialized indexes. We partner with teams to build vertical slices of the web that cover exactly what their agents require: regulatory filings, supplier catalogs, product detail pages, or local inventory feeds.

Extract: vertically integrated infrastructure

The web is a constantly changing organism. During our time building Zecento, one of Italy's most widely used e-commerce productivity products, we watched product pages update every few hours. Static indexes cannot keep up with changes at that pace. To provide the most up-to-date and accurate data to agents, we have invested in building our own vertically integrated stack to extract real-time data from the Web: document rendering and normalization, distributed infrastructure, proxy rotation, and parser versioning. Owning the entire pipeline gives us significant flexibility and reliability, along with a cost advantage of up to 70%.
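One small piece of the freshness problem can be sketched in a few lines: deciding whether a page has meaningfully changed since the last fetch. The snippet below is a minimal illustration using only the Python standard library, not our production code. The names `normalize`, `fingerprint` and `ChangeTracker` are hypothetical, and the normalization rule (collapse whitespace, lowercase) is an assumption that a real pipeline would tune per site.

```python
import hashlib
import re


def normalize(text):
    """Collapse whitespace and lowercase so cosmetic edits do not count as changes."""
    return re.sub(r"\s+", " ", text).strip().lower()


def fingerprint(text):
    """Stable SHA-256 digest of the normalized page text."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


class ChangeTracker:
    """Remembers the last fingerprint seen for each URL (in memory only)."""

    def __init__(self):
        self._seen = {}  # url -> digest of the last fetched content

    def has_changed(self, url, text):
        """Return True when the URL is new or its normalized content differs."""
        digest = fingerprint(text)
        changed = self._seen.get(url) != digest
        self._seen[url] = digest
        return changed
```

A scheduler could call `has_changed` after every fetch and re-run the downstream parsers only when it returns `True`, which keeps fast-moving product pages fresh without reprocessing pages that have not moved.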

Structure: parsers for deterministic outputs

Agents need structured data compatible with the systems they operate on and their backends. Passing raw markdown to an LLM does not produce the deterministic output that business systems need. This is why we have developed a memory layer and the Olostep parser framework to chunk documents, extract typed fields, and apply proprietary algorithms that turn messy HTML into schema-aligned JSON. The results can be fed directly to CRMs, knowledge graphs, databases, and downstream AI reasoning layers.
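As a rough illustration of what "messy HTML into schema-aligned JSON" means, here is a minimal sketch built on Python's standard `html.parser`. It is not the Olostep parser framework: the `Product` schema, the CSS class names, the `FieldCollector` class and the `parse_product` function are all hypothetical, chosen only to show the idea of a deterministic parser that either returns typed fields or fails loudly.

```python
import json
from dataclasses import asdict, dataclass
from html.parser import HTMLParser

VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}  # tags with no closing tag


@dataclass
class Product:
    """Hypothetical target schema; field names are illustrative."""
    name: str
    price: float
    currency: str
    in_stock: bool


class FieldCollector(HTMLParser):
    """Collects the text inside elements whose class names a known field."""

    FIELDS = {"product-name", "product-price", "product-stock"}

    def __init__(self):
        super().__init__()
        self._field = None  # field currently being read, if any
        self._depth = 0     # nesting depth inside that field's element
        self.raw = {}       # field -> raw text

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._field is not None:
            self._depth += 1
            return
        for cls in (dict(attrs).get("class") or "").split():
            if cls in self.FIELDS:
                self._field, self._depth = cls, 1
                self.raw[cls] = ""
                break

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or self._field is None:
            return
        self._depth -= 1
        if self._depth == 0:
            self._field = None

    def handle_data(self, data):
        if self._field is not None:
            self.raw[self._field] += data


def parse_product(html):
    """Return a typed Product, or raise ValueError if the page does not fit."""
    collector = FieldCollector()
    collector.feed(html)
    raw = {key: value.strip() for key, value in collector.raw.items()}
    missing = FieldCollector.FIELDS - raw.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    # Assumes the price is written as "<amount> <currency>", e.g. "1,299.00 EUR".
    amount, currency = raw["product-price"].split()
    return Product(
        name=raw["product-name"],
        price=float(amount.replace(",", "")),
        currency=currency,
        in_stock=raw["product-stock"].lower() == "in stock",
    )


SAMPLE_HTML = (
    '<div><h1 class="product-name"> Espresso Machine </h1>'
    '<span class="product-price"><b>1,299.00</b> EUR</span>'
    '<p class="product-stock">In stock</p></div>'
)
```

`json.dumps(asdict(parse_product(SAMPLE_HTML)))` yields a JSON object with the same four typed fields on every run. The design choice worth noting is that the parser raises on a missing field instead of guessing, so a downstream CRM or database never receives a half-filled record.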

We are encoding all the use cases we see and our learnings in a specialized model.

Olostep: API to search, extract and structure Data

We are still in the early innings, but AI is already changing how we search and work on the Web.

The ceiling of what can be done in this space is extremely high, and we often talk internally about the work ahead of us: from deploying scheduled research agents and automated workflows on our infrastructure to advancing search, data extraction, and structuring capabilities, and developing custom models trained specifically for these tasks.

The space will evolve a lot in the next several years. Our goal is to be, at each stage, the best solution for AI to interface with the Web.

This field is so fascinating that it's easy to get lost in the theoretical realm of it and start optimizing for benchmarks.

At Olostep, the only benchmark we care about is a simple one: Can we deliver a magical experience to our customers by offering them the most reliable, accurate, scalable, and cost-effective solution for their use case?

Customers are switching and sticking with Olostep because the answer is consistently yes. And every day, we work to keep it that way.

If you’re building AI agents or want to bring autonomous research into your organization, we’d love to chat and see how we can help you search, extract, and structure the data that matters most.

What will be the interface to the Web for AI?

A follow-up to the question of Search for AI is: What will be the interface for this? For humans, browsers have become synonymous with search. Will the same apply to AIs?

Will AIs use APIs, a browser, or both?

As we transition from a Web that simply answers our questions to an agentic Web that anticipates our needs, entirely new possibilities and scenarios emerge.

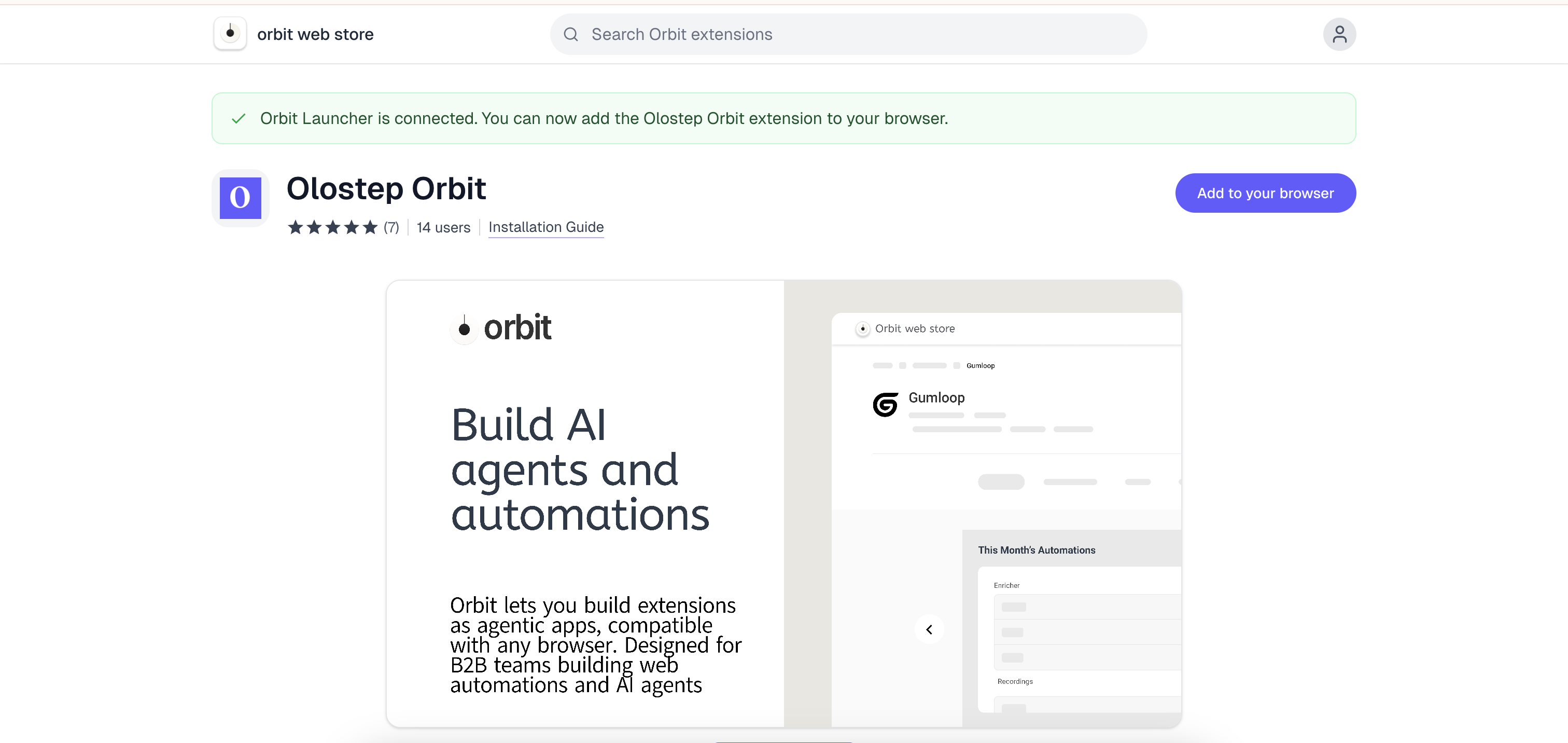

We believe the browser for AI agents, especially for businesses, will be different from the current form factors we are seeing.

We have been working closely with startups and companies on this. In 2026, we are launching Orbit.