Extracting data from websites is a critical skill for any business looking to gain a competitive edge. The modern web is the world's largest database, but this information is unstructured, locked away in HTML. To leverage it for price tracking, lead generation, or market research, you must first solve the core problem: how do you turn messy web pages into clean, structured, and actionable data?

This guide explores the primary approaches to solving this problem. You can either build and maintain your own web scraper to parse HTML directly, a path that offers total control but comes with significant technical hurdles. Alternatively, you can use a dedicated web scraping API that handles the complex infrastructure of data extraction, delivering structured data through a simple request. We'll walk through both concepts, provide a step-by-step solution using an API, and cover the critical details of error handling, anti-bot countermeasures, and ethical best practices.

Why Web Data Extraction is a Business Superpower

In a market where information is everything, the ability to systematically extract data from websites isn't just a technical capability—it's a core business strategy. This process is the engine that powers dynamic pricing models, the source of high-quality sales leads, and the lens required to truly understand market sentiment.

The practice has become standard for any data-driven organization. Data from Statista indicates that the web scraping market is projected to grow significantly, reflecting its increasing adoption. For instance, automated data scraping can dramatically cut down manual data collection time, translating into massive operational savings and faster time-to-insight.

From Raw HTML to Actionable Intelligence

At its heart, web data extraction gives you access to the same information your competitors and customers see, but at a scale and speed no human could ever match. This process transforms messy, unstructured web content into a clean, organized format like JSON, which is ready for analysis, database ingestion, or use in AI applications.

Our guide on turning websites into LLM-ready data dives deep into how this structured information becomes incredibly powerful for training AI models and fueling advanced analytics.

Consider an e-commerce business that automatically tracks competitor prices several times a day to optimize its own pricing strategy. Or a marketing team that scrapes social media and news articles for real-time sentiment analysis, allowing them to get ahead of a potential brand crisis. These are not futuristic concepts; they are practical applications powered by web data extraction.

Choosing Your Data Extraction Method

When you decide to extract web data, you face a critical choice: build your own manual scraper or use a specialized API. Each path has distinct trade-offs depending on your project's scale, your team's technical expertise, and your requirements for reliability and maintenance.

| Method | Best For | Technical Effort | Scalability & Maintenance |

|---|---|---|---|

| Manual Scraping | Small-scale projects, learning, and situations requiring total control. | High. You are responsible for everything: IP rotation, CAPTCHAs, user agents, JavaScript rendering, and adapting to website layout changes. | Low. Becomes a significant maintenance burden as you add more sites or increase frequency. Highly brittle. |

| Scraping API | Large-scale projects, reliable data pipelines, and teams that need to move fast. | Low. The API manages all the complex anti-bot and infrastructure challenges for you. | High. Built from the ground up to handle millions of requests reliably with minimal maintenance from your side. |

Let's break these down further:

-

Manual Web Scraping: This approach involves writing your own scripts, typically using libraries like Python's Requests for HTTP requests and Beautiful Soup for HTML parsing. It provides complete control but makes you responsible for every technical challenge: IP blocks, CAPTCHAs, browser fingerprinting, and constant updates when websites change their structure. It's an excellent way to learn the fundamentals but is often unsustainable for business-critical operations.

-

API-Based Extraction: Using a service like the Olostep API abstracts away the complexities. You send a simple API request with the target URL, and the service handles proxy rotation, browser rendering, and parsing. In return, you receive clean, structured data. This approach is designed for scale, reliability, and speed, allowing your team to focus on using data rather than acquiring it.

Key Takeaway: While building your own scraper offers total customization, an API-based solution provides the scalability and resilience needed for serious projects. It frees you from the constant cat-and-mouse game of anti-bot workarounds and lets you get to the insights faster.

Step 1: Preparing Your Python Environment

Before extracting data, a solid foundation is essential. This means setting up a Python environment with the necessary tools. A clean, properly configured environment is your best defense against unexpected errors.

Our primary tool for interacting with the Olostep API is the requests library. It simplifies the process of sending HTTP requests in Python. If you don't have Python installed, visit the official Python website to download the latest version.

Installing the Requests Library

With Python installed, you can add the requests library using pip, Python's package installer. Open your terminal or command prompt and run this command:

pip install requestsThis single command downloads and installs the library, making it available for your scripts.

Securing Your API Key

To use the Olostep API, you'll need an API key. This key authenticates your requests and must be kept confidential.

CRITICAL: Never hardcode your API key directly in your scripts. Committing code with an exposed key to a public repository like GitHub will lead to unauthorized use of your account.

The professional standard for managing sensitive credentials is to use environment variables. This practice stores the key separately from your code, allowing your script to access it securely at runtime.

Storing the API Key as an Environment Variable

The method for setting an environment variable differs by operating system:

- macOS/Linux: Open your terminal and use the

exportcommand. To make it permanent, add this line to your~/.bashrcor~/.zshrcfile.export OLOSTEP_API_KEY="your_api_key_here" - Windows (Command Prompt): Use the

setcommand. For a permanent setting, add it through the "Edit the system environment variables" control panel.set OLOSTEP_API_KEY="your_api_key_here"

Accessing the API Key in Python

Once the key is stored, you can access it in your Python script using the built-in os library. This is the standard practice for secure credential management.

import os

# Get the API key from environment variables

api_key = os.getenv("OLOSTEP_API_KEY")

# Fail fast if the key is not found

if not api_key:

raise ValueError("API key not found. Please set the OLOSTEP_API_KEY environment variable.")

print("API key loaded successfully!")This check ensures your script is robust. It won't attempt to run without the necessary credentials, preventing failed requests and providing a clear error message. With your environment ready and credentials secure, you can proceed to your first data extraction.

Step 2: Executing Your First Data Extraction with Olostep API

With the environment configured, it's time to extract live data. We will build a complete Python script that sends a request to the Olostep API to scrape an e-commerce product page. This example demonstrates the core mechanics of turning a webpage into structured JSON.

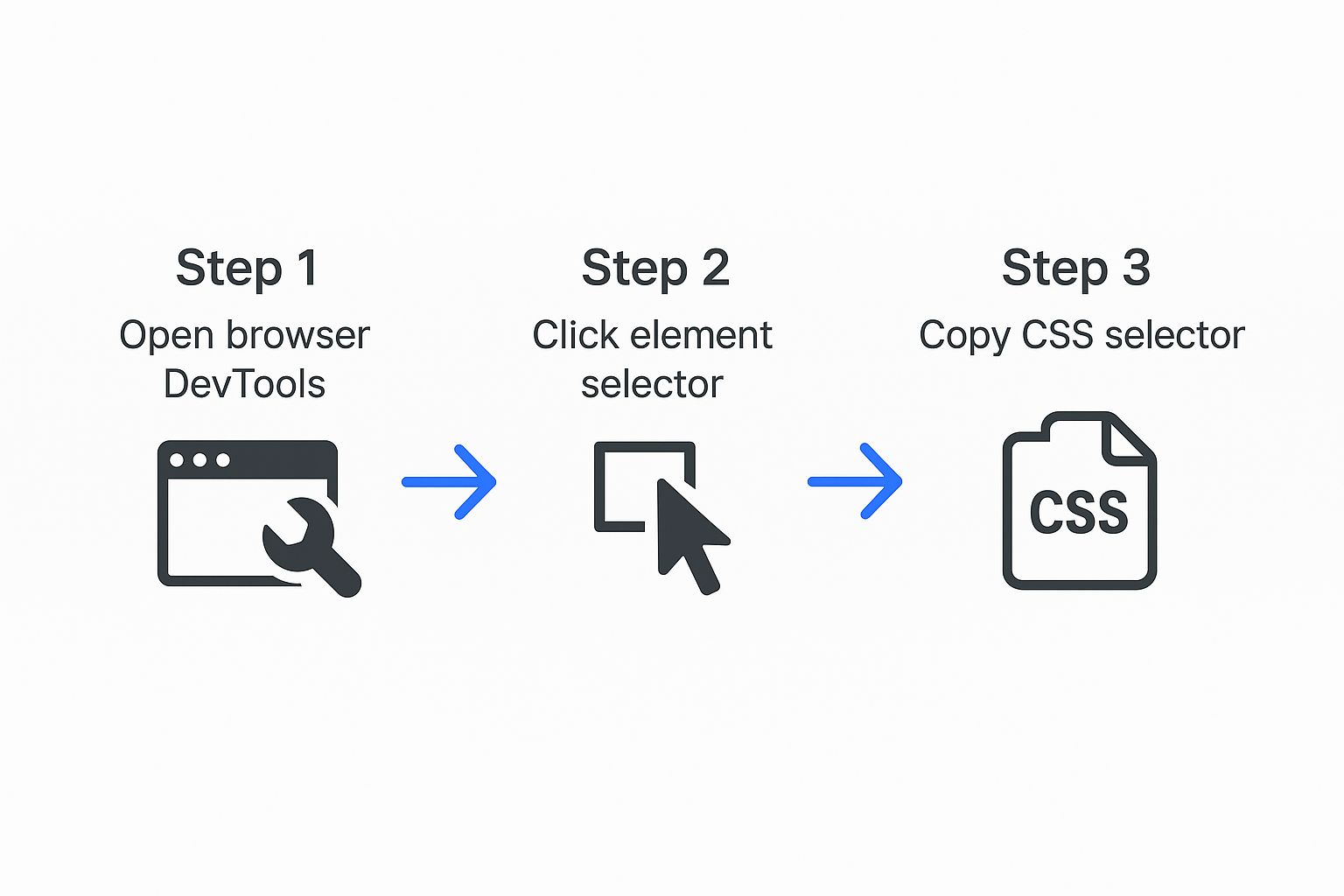

Identifying What to Scrape with CSS Selectors

Before writing code, you must identify the specific data points you want to extract. The most common way to do this is with CSS selectors, which are patterns used to select specific HTML elements on a page, such as the product title, price, or description.

Modern browsers provide developer tools that make finding these selectors simple.

The process is straightforward:

- Right-click the element you want to scrape and select "Inspect."

- The developer tools will open, highlighting the corresponding HTML.

- Right-click the highlighted HTML, go to "Copy," and select "Copy selector."

This provides the precise path for the API to locate your target data.

Building the API Request in Python

Now, let's construct the API request. We will use the requests library to send a POST request to the https://api.olostep.com/v1/scrapes endpoint. The body of our request will be a JSON object containing the target URL and the extraction instructions (our CSS selectors).

This complete, copy-pasteable script targets a sample product page to extract its title and price.

import os

import requests

import json

# Retrieve your API key from environment variables for security

api_key = os.getenv("OLOSTEP_API_KEY")

if not api_key:

raise ValueError("API key not found. Please set the OLOSTEP_API_KEY environment variable.")

# Define the API endpoint and headers

api_url = "https://api.olostep.com/v1/scrapes"

headers = {

"Authorization": f"Bearer {api_key}",

"Content-Type": "application/json"

}

# Define the payload with the target URL and CSS selectors

payload = {

"url": "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html",

"parsing_instructions": {

"title": {

"selector": "h1"

},

"price": {

"selector": ".price_color"

}

}

}

# Execute the POST request with error handling

try:

response = requests.post(api_url, headers=headers, json=payload, timeout=30)

# Raise an exception for bad status codes (4xx or 5xx)

response.raise_for_status()

# Print the full JSON response from the API, formatted for readability

print(json.dumps(response.json(), indent=2))

except requests.exceptions.HTTPError as errh:

print(f"HTTP Error: {errh}")

# Print the response body if available, as it may contain error details

if errh.response:

print(f"Response Body: {errh.response.text}")

except requests.exceptions.ConnectionError as errc:

print(f"Error Connecting: {errc}")

except requests.exceptions.Timeout as errt:

print(f"Timeout Error: {errt}")

except requests.exceptions.RequestException as err:

print(f"An unexpected error occurred: {err}")Understanding the API Response

When you run the script, the Olostep API performs the heavy lifting: it navigates to the URL, handles any anti-bot measures, renders the page, applies your CSS selectors, and returns a structured JSON object.

A successful response payload will look like this:

{

"request_id": "scr-rq-12345abcdef",

"data": {

"url": "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html",

"parsed_data": {

"price": "£51.77",

"title": "A Light in the Attic"

},

"full_html": "<!DOCTYPE html>..."

},

"status": "completed",

"credits_used": 1

}The response structure is designed for clarity:

request_id: A unique identifier for your API call, useful for debugging.data: The main container for the extracted content.parsed_data: Your target data, organized by the keys you defined (title,price).full_html: The complete HTML source code of the page, available for more complex, client-side parsing if needed.status: Confirms the request wascompletedsuccessfully.credits_used: Shows the API credits consumed by the request.

Key Takeaway: The API delivers both a

parsed_dataobject for immediate use and thefull_htmlsource for flexibility. This dual output supports both quick extractions and deeper, more complex data processing workflows.

Once you have successfully extracted data, the next logical step is to integrate it into a larger system. For more on this, see this excellent guide to building data pipelines, which explains how to turn raw scraped data into a valuable, continuously flowing asset.

Step 3: Overcoming Anti-Bot Defenses and Roadblocks

Extracting data is rarely a simple, unobstructed task. Websites deploy sophisticated defenses to distinguish between human users and automated bots. These measures—such as CAPTCHAs, IP rate limiting, and JavaScript challenges—can stop a basic scraper instantly.

This is the central challenge of manual scraping. It's also where a professional scraping API provides immense value. Instead of building and maintaining a complex infrastructure to bypass these defenses, you delegate the problem to a specialized service.

A robust API handles this automatically:

- Proxy Management: It rotates requests through a massive pool of residential and datacenter proxies, making it nearly impossible for a target website to block your scraper based on its IP address.

- Browser Fingerprinting: It mimics real web browsers by sending legitimate-looking user agents and other headers, appearing as a genuine user.

- CAPTCHA Solving: It integrates automated solvers to handle CAPTCHAs without manual intervention.

By offloading this "cat-and-mouse" game, you can focus on data analysis rather than evasion. Our guide on how to scrape without getting blocked offers a deeper dive into these strategies.

Handling Common HTTP Errors with Retries

Even with a powerful API, you must build resilience into your code. Networks are unreliable, and servers can fail. A production-ready script must handle transient errors gracefully instead of crashing.

The most common errors you will encounter are HTTP status codes like 403 Forbidden, 429 Too Many Requests, and various 5xx server errors. A 403 may indicate a blocked request, while a 429 is an explicit signal to reduce your request rate. 5xx errors indicate a problem on the server's end.

Key Takeaway: The best practice for handling temporary failures is to implement a retry mechanism with exponential backoff. This strategy involves waiting for progressively longer intervals between retries, giving the server (and your connection) time to recover without overwhelming it.

Implementing an Exponential Backoff Strategy in Python

A robust retry logic prevents your script from failing at the first sign of trouble. The Python function below wraps the API call with logic to automatically retry on specific HTTP errors, backing off exponentially after each failure. This is a battle-tested approach for building reliable data extraction processes.

import requests

import time

import os

import json

def scrape_with_retries(payload, max_retries=5, timeout=30):

"""

Sends a request to the Olostep API with a retry mechanism.

Args:

payload (dict): The request payload.

max_retries (int): The maximum number of retry attempts.

timeout (int): The request timeout in seconds.

Returns:

dict: The JSON response from the API, or None if all retries fail.

"""

api_key = os.getenv("OLOSTEP_API_KEY")

if not api_key:

raise ValueError("API key not found. Please set the OLOSTEP_API_KEY environment variable.")

api_url = "https://api.olostep.com/v1/scrapes"

headers = {

"Authorization": f"Bearer {api_key}",

"Content-Type": "application/json"

}

# Status codes that warrant a retry

retryable_status_codes = {429, 500, 502, 503, 504}

backoff_factor = 2

wait_time = 1 # Initial wait time in seconds

for attempt in range(max_retries):

try:

response = requests.post(api_url, headers=headers, json=payload, timeout=timeout)

# If the status code is retryable, raise an HTTPError to trigger the retry logic

if response.status_code in retryable_status_codes:

response.raise_for_status()

# If the request was successful (e.g., 200 OK), return the JSON data

return response.json()

except requests.exceptions.HTTPError as e:

print(f"Attempt {attempt + 1}/{max_retries} failed with status {e.response.status_code}. Retrying in {wait_time} seconds...")

except requests.exceptions.RequestException as e:

print(f"Attempt {attempt + 1}/{max_retries} failed with a connection error: {e}. Retrying in {wait_time} seconds...")

time.sleep(wait_time)

# Increase wait time for the next attempt (exponential backoff)

wait_time *= backoff_factor

print(f"Failed to fetch data after {max_retries} attempts.")

return None

# --- Example Usage ---

if __name__ == "__main__":

scrape_payload = {

"url": "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html",

"parsing_instructions": {

"title": {"selector": "h1"},

"price": {"selector": ".price_color"}

}

}

data = scrape_with_retries(scrape_payload)

if data:

print("\nSuccessfully extracted data:")

print(json.dumps(data, indent=2))By incorporating this retry logic, you transform a fragile script into a resilient data extraction tool prepared for the unpredictable nature of the web.

Evaluating Data Extraction Tools and APIs

Choosing the right tool is one of the most critical decisions in a data extraction project. The market is filled with excellent options, each with its own strengths, weaknesses, and ideal use cases. Your goal is not to find the single "best" API but the one that best fits your project requirements, technical comfort, and budget.

To make an informed decision, you must compare core features directly. By 2025, the landscape for website data extraction has become highly specialized, with different tools optimized for various data types and scalability needs. APIs are the standard for reliable, real-time, and structured data delivery at scale.

A Side-by-Side Comparison of Leading Scraping APIs

Let's compare Olostep with other popular services like ScrapingBee, Bright Data, ScraperAPI, and Scrapfly. Each service addresses the core problem of simplifying web scraping but with different philosophies, feature sets, and pricing models.

For a more comprehensive analysis, check out our complete guide to the top scraping tools available today.

| Feature | Olostep | ScrapingBee | Bright Data | ScraperAPI | Scrapfly |

|---|---|---|---|---|---|

| Pricing Model | Credit-based, pay-as-you-go | Subscription-based, credits per request | Enterprise-focused, usage-based | Subscription-based, credits per request | Subscription-based, bandwidth & features |

| JavaScript Rendering | Yes (standard) | Yes (consumes more credits) | Yes (via Scraping Browser) | Yes (premium parameter) | Yes (built-in) |

| Residential Proxies | Yes (included) | Yes (premium feature) | Yes (core offering) | Yes (included in all plans) | Yes (add-on) |

| CAPTCHA Solving | Yes (automated) | Yes (automated) | Yes (automated) | Yes (automated) | Yes (automated) |

| Ease of Use | Very High | High | Moderate (powerful but complex) | High | High |

This table provides a high-level overview to help you weigh your options based on the features that matter most for your project.

Key Differentiators to Consider

Beyond a feature checklist, consider the user experience and underlying architecture.

- Olostep: Designed for simplicity and predictability. Its credit-based system is straightforward: one credit for a simple request, with clear costs for advanced features. This makes it ideal for developers and startups who need a reliable, no-fuss solution that is easy to budget.

- ScrapingBee: A flexible and well-documented competitor. However, premium features like residential proxies and JavaScript rendering can consume credits quickly, making cost forecasting for complex projects more challenging.

- Bright Data: An enterprise-grade powerhouse with a massive proxy network and a suite of advanced tools. Its power and complexity are suited for large organizations with significant data extraction needs and budgets.

- ScraperAPI: Known for its generous free tier and ease of use, making it a popular starting point. Similar to ScrapingBee, costs can increase with the use of premium features.

- Scrapfly: Offers advanced features like an anti-scraping protection bypass and a focus on scraping at scale, with pricing tied to bandwidth and feature usage.

Your choice ultimately depends on your project's scale and complexity. For more on using programmatic interfaces for data collection, this API-based data collection automation guide is an excellent resource.

Scraping Data Responsibly and Ethically

Knowing how to extract data from websites is a powerful skill that comes with the responsibility to be a good digital citizen. Just because data is publicly visible does not mean it is a free-for-all. Adhering to an ethical framework ensures your projects are sustainable and keeps you out of legal trouble.

The first step is to consult the robots.txt file, located at the root of a domain (e.g., example.com/robots.txt). This file outlines the site owner's rules for automated crawlers. Respecting robots.txt is the absolute baseline of ethical scraping.

Navigating Legal and Privacy Boundaries

After robots.txt, review the website's Terms of Service (ToS). Many ToS documents explicitly prohibit automated data gathering. While the legal precedent can be complex (e.g., the hiQ Labs v. LinkedIn case), violating the ToS can lead to IP blocks or legal action.

Furthermore, you must comply with data privacy regulations like the GDPR in Europe and the CCPA in California. These laws impose strict rules on the collection and handling of personally identifiable information (PII).

A Golden Rule: Never scrape data that is behind a login, protected by a paywall, or contains personal details like names, emails, or phone numbers, unless you have explicit consent. The legal and ethical risks are not worth it.

Practical Checklist for Ethical Scraping

Follow this checklist to ensure your scraping activities are responsible.

- Respect the Request Rate: Do not overwhelm a server with rapid-fire requests. Introduce random delays (e.g., 1–5 seconds) between requests to mimic human behavior and reduce server load.

- Identify Your Bot: Be transparent by setting a descriptive User-Agent string in your request headers. For example:

"MyProject-Scraper/1.0 (contact@myproject.com)". This identifies you and provides a contact method. - Scrape During Off-Peak Hours: Run large scraping jobs during times of low traffic for the target website, such as late at night.

- Cache Data: Avoid re-scraping the same page if the data has not changed. Store a local copy and only request a fresh version when necessary.

The web scraping market is growing rapidly due to its immense value. Some web scraping industry trends project the market to expand significantly in the coming years. This growth underscores the importance of adopting ethical practices to ensure the web remains an open and accessible resource.

Troubleshooting Common Failures

- CAPTCHAs: If you are scraping manually, CAPTCHAs will stop your script. A scraping API with built-in solvers is the most effective solution.

403 Forbidden/429 Too Many Requests: These errors indicate your IP has been flagged or you are making requests too quickly. Use rotating proxies and implement rate limiting with exponential backoff.5xxServer Errors: These are server-side issues. Your best response is a retry mechanism, as the problem is likely temporary.- Timeouts: The target server may be slow to respond. Increase the timeout parameter in your HTTP client and implement retries.

Final Checklist and Next Steps

You've learned the fundamentals of extracting data from websites. Here's a concise checklist to guide your projects:

- Define Your Goal: Clearly identify the data you need and how you will use it.

- Choose Your Method: Decide between manual scraping and a scraping API based on your project's scale and complexity.

- Set Up Your Environment: Install necessary libraries and secure your API keys using environment variables.

- Inspect Your Target: Use browser developer tools to find the correct CSS selectors.

- Build with Resilience: Implement robust error handling and a retry mechanism with exponential backoff.

- Scrape Ethically: Always check

robots.txtand the Terms of Service, respect rate limits, and avoid personal data. - Process and Store Data: Plan how you will clean, transform, and store the extracted data for analysis.

Next Steps:

- Start small with a single, simple website to practice your skills.

- Explore integrating your scraped data into a database or a data visualization tool.

- Scale your efforts by parameterizing your scripts to handle multiple URLs and targets.

Ready to stop wrestling with messy web pages and start working with clean, structured data? Olostep provides a powerful, developer-friendly API that handles all the hard parts for you. You can start extracting data in minutes, not weeks. Get started for free with 500 credits.